Tutorial

tutorial.RmdTwo datasets simdata and turnout are shipped with the gsynth package. Load these two datasets:

library(gsynth)

#> ## Syntax has been updated since v.1.2.0.

#> ## Comments and suggestions -> yiqingxu@stanford.edu.

data(gsynth)

ls()

#> [1] "simdata" "turnout"Multiperiod DID

We start with the first example, a simulated dataset described in the paper. There are 5 treated units, 45 control units, and 30 time periods. The treatment kicks at Period 21 for all treated units, hence, a multiperiod DID set up.

head(simdata)

#> id time Y D X1 X2 eff error mu alpha

#> 1 101 1 6.210998 0 0.3776736 -0.1732470 0 0.2982276 5 -0.06052364

#> 2 101 2 4.027106 0 1.7332009 -0.4945009 0 0.6365697 5 -0.06052364

#> 3 101 3 8.877187 0 1.8580159 0.4984432 0 -0.4837806 5 -0.06052364

#> 4 101 4 11.515346 0 1.3943369 1.1272713 0 0.5168620 5 -0.06052364

#> 5 101 5 5.971526 0 2.3636963 -0.1535215 0 0.3689645 5 -0.06052364

#> 6 101 6 8.237905 0 0.5370867 0.8774397 0 -0.2153805 5 -0.06052364

#> xi F1 L1 F2 L2

#> 1 1.1313372 0.25331851 -0.04303273 0.005764186 -0.8804667

#> 2 -1.4606401 -0.02854676 -0.04303273 0.385280401 -0.8804667

#> 3 0.7399475 -0.04287046 -0.04303273 -0.370660032 -0.8804667

#> 4 1.9091036 1.36860228 -0.04303273 0.644376549 -0.8804667

#> 5 -1.4438932 -0.22577099 -0.04303273 -0.220486562 -0.8804667

#> 6 0.7017843 1.51647060 -0.04303273 0.331781964 -0.8804667Visualizing data

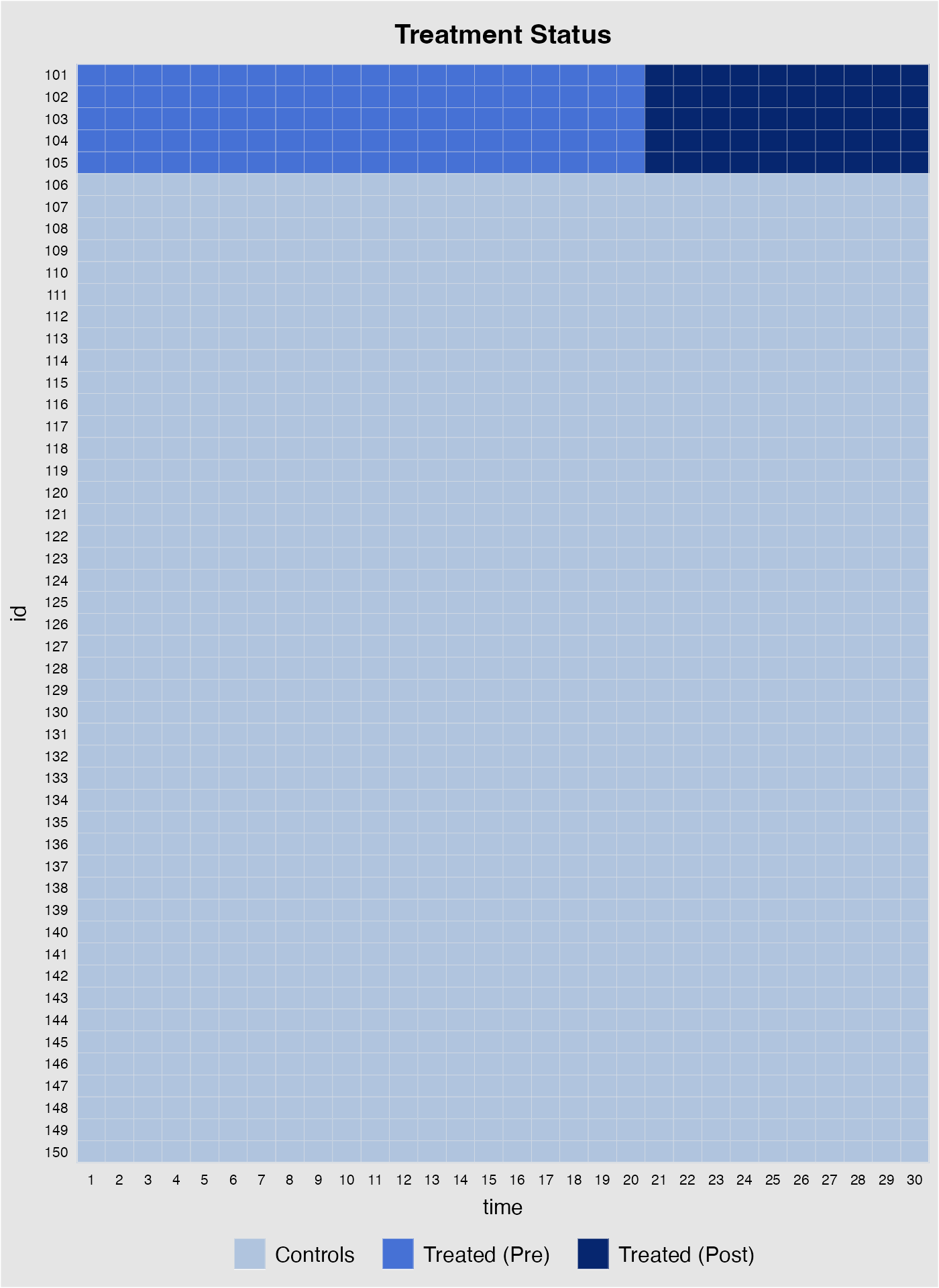

Before we conduct any statistical analysis, it is helpful to visualize the data structure and/or spot missing values (if there are any). We can easily do so with the help of the panelView package. The following figure shows that: (1) there are 5 treated units and 45 control units; (2) the treated units start to be treated in period 21; and (3) there are no missing values, which is a rare case.

## devtools::install_github('xuyiqing/panelView') # if not already installed

library(panelView)

#> ## See bit.ly/panelview4r for more info.

#> ## Report bugs -> yiqingxu@stanford.edu.

panelview(Y ~ D, data = simdata, index = c("id","time"), pre.post = TRUE)

The following line of code visualizes the outcome variable; different colors correspond to different treatment status.

Estimation

We estimate the model only using information of the outcome variable \(Y\), the treatment indicator \(D\), and two observed covariates \(X_{1}\) and \(X_{2}\) (as well as the group indicators \(id\) and \(time\)).

Now we formally run the gsynth algorithm proposed by Xu (2017). The first variable on the right-hand-side of the formula is a binary treatment indicator. The rest of the right-hand-side variables serve as controls. The index option specifies the unit and time indicators.

The force option (“none,” “unit,” “time,” and “two-way”) specifies the additive component(s) of the fixed effects included in the model. The default option is “unit” (including additive unit fixed effects). A cross-validation procedure is provided (when CV = TRUE) to select the number of unobserved factors within the interval of r=c(0,5). When cross-validation is switched off, the first element in r will be set as the number of factors.

When the uncertainty estimates are needed, set se = TRUE. When the number of treated units is small, a parametric bootstrap procedure is preferred. Alternatively, one can use a non-parametric bootstrap procedure by setting inference = "nonparametric"; it only works well when the treatment group is relatively large, e.g. \(N_{tr}> 40\)). The number of bootstrap runs is set by nboots.

When dealing with clustered data, for example, panel data with regionally clustered units, one can use the cluster bootstrap procedure by specifying name of the cluster variable in option cl. For example, cl = "state" for city-level data.

system.time(

out <- gsynth(Y ~ D + X1 + X2, data = simdata,

index = c("id","time"), force = "two-way",

CV = TRUE, r = c(0, 5), se = TRUE,

inference = "parametric", nboots = 1000,

parallel = FALSE)

)

#> Cross-validating ...

#> r = 0; sigma2 = 1.84865; IC = 1.02023; PC = 1.74458; MSPE = 2.37280

#> r = 1; sigma2 = 1.51541; IC = 1.20588; PC = 1.99818; MSPE = 1.71743

#> r = 2; sigma2 = 0.99737; IC = 1.16130; PC = 1.69046; MSPE = 1.14540*

#> r = 3; sigma2 = 0.94664; IC = 1.47216; PC = 1.96215; MSPE = 1.15032

#> r = 4; sigma2 = 0.89411; IC = 1.76745; PC = 2.19241; MSPE = 1.21397

#> r = 5; sigma2 = 0.85060; IC = 2.05928; PC = 2.40964; MSPE = 1.23876

#>

#> r* = 2

#>

#>

Simulating errors .............

Bootstrapping ...

#> ..........

#> user system elapsed

#> 7.875 0.055 7.991Because the core function of gsynth is written in C++, the algorithm runs relatively fast. The entire procedure (including cross-validation and 1,000 bootstrap runs) takes about 6 seconds on an iMac (2016 model).

The algorithm prints out the results automatically. sigma2 stands for the estimated variance of the error term; IC represents the Bayesian Information Criterion; and MPSE is the Mean Squared Prediction Error. The cross-validation procedure selects an \(r\) that minimizes the MSPE.

Users can use the print function to visualize the result or retrieve the numbers by directly accessing the gsynth object. est.att reports the average treatment effect on the treated (ATT) by period; est.avg shows the ATT averaged over all periods; and est.beta reports the coefficients of the time-varying covariates. Treatment effect estimates from each bootstrap run is stored in eff.boot, an array whose dimension = (#time periods * #treated * #bootstrap runs).

print(out)

out$est.att

out$est.avg

out$est.betaParallel computing will speed up the bootstrap procedure significantly. When parallel = TRUE (default) and cores options are omitted, the algorithm will detect the number of available cores on your computer automatrically. (Warning: it may consume most of your computer’s computational power if all cores are being used.)

system.time(

out <- gsynth(Y ~ D + X1 + X2, data = simdata,

index = c("id","time"), force = "two-way",

CV = TRUE, r = c(0, 5), se = TRUE,

inference = "parametric", nboots = 1000,

parallel = TRUE, cores = 4)

)

#> Parallel computing ...

#> Cross-validating ...

#> r = 0; sigma2 = 1.84865; IC = 1.02023; PC = 1.74458; MSPE = 2.37280

#> r = 1; sigma2 = 1.51541; IC = 1.20588; PC = 1.99818; MSPE = 1.71743

#> r = 2; sigma2 = 0.99737; IC = 1.16130; PC = 1.69046; MSPE = 1.14540*

#> r = 3; sigma2 = 0.94664; IC = 1.47216; PC = 1.96215; MSPE = 1.15032

#> r = 4; sigma2 = 0.89411; IC = 1.76745; PC = 2.19241; MSPE = 1.21397

#> r = 5; sigma2 = 0.85060; IC = 2.05928; PC = 2.40964; MSPE = 1.23876

#>

#> r* = 2

#>

#>

Simulating errors ...

Bootstrapping ...

#>

#> user system elapsed

#> 0.848 0.122 4.768gsynth also incoporates jackknife method for uncertainty estimates.

out2 <- gsynth(Y ~ D + X1 + X2, data = simdata,

index = c("id","time"), force = "two-way",

CV = FALSE, r = c(2, 5), se = TRUE,

inference = "jackknife",

parallel = TRUE, cores = 4)

#> Parallel computing ...

#>

Jackknifing ...

#> Sometimes researchers may be interested in cumulative treatment effects like cumulative abnormal returns in event study. In this version, we add a simple function cumuEff to calculate cumulative treatment effects. One can specify a period of interest in option period. If left blank, cumulative treatment effects for all post-treatment period will be calculated.

cumu1 <- cumuEff(out, cumu = TRUE, id = NULL, period = c(0,5))

cumu1$est.catt

#> CATT S.E. CI.lower CI.upper p.value

#> 0 -0.003308833 0.3425545 -0.5967511 0.7245332 0.864

#> 1 1.232653177 0.8697420 -0.4072834 2.8596959 0.154

#> 2 2.862917489 1.1003841 0.6816032 4.7945799 0.012

#> 3 5.575095192 1.2718741 3.0262651 7.9543223 0.000

#> 4 9.041852883 1.6647135 5.7551097 11.9019985 0.000

#> 5 14.781985192 1.9723211 10.8890541 18.2833883 0.000One can also calculate average treatment effect for a sub-group by specifying unit names in option id. By specifying cumu = FALSE, average treatment effects (rather than cumulative effects) at each period will be returned. Note that in this case, parametric bootstrap procedure is needed for uncertainty estimates.

cumu2 <- cumuEff(out, cumu = FALSE, id = c(101, 102, 103), period = c(0,5))

cumu2$est.catt

#> CATT S.E. CI.lower CI.upper p.value

#> 0 -0.2277091 0.4097594 -0.9722389 0.6112413 0.580

#> 1 2.2491804 0.8555241 0.5299843 3.8894070 0.012

#> 2 2.3930760 0.7070076 0.9513236 3.7711897 0.000

#> 3 2.3067796 0.6935023 0.9707975 3.7067215 0.000

#> 4 2.5812540 0.8911715 0.7169348 4.2338789 0.010

#> 5 4.7445071 0.6918054 3.2598528 5.9911281 0.000Plot results

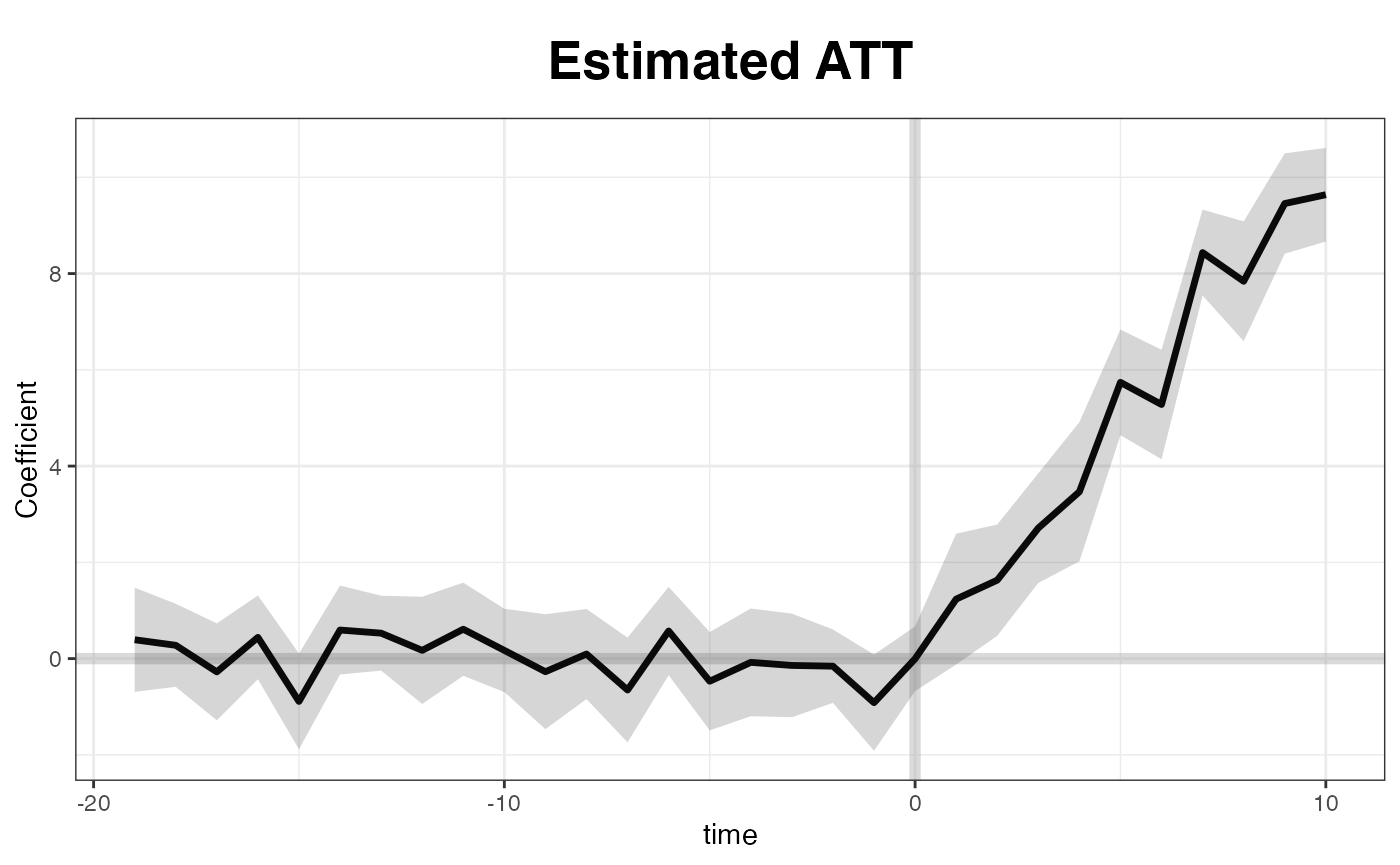

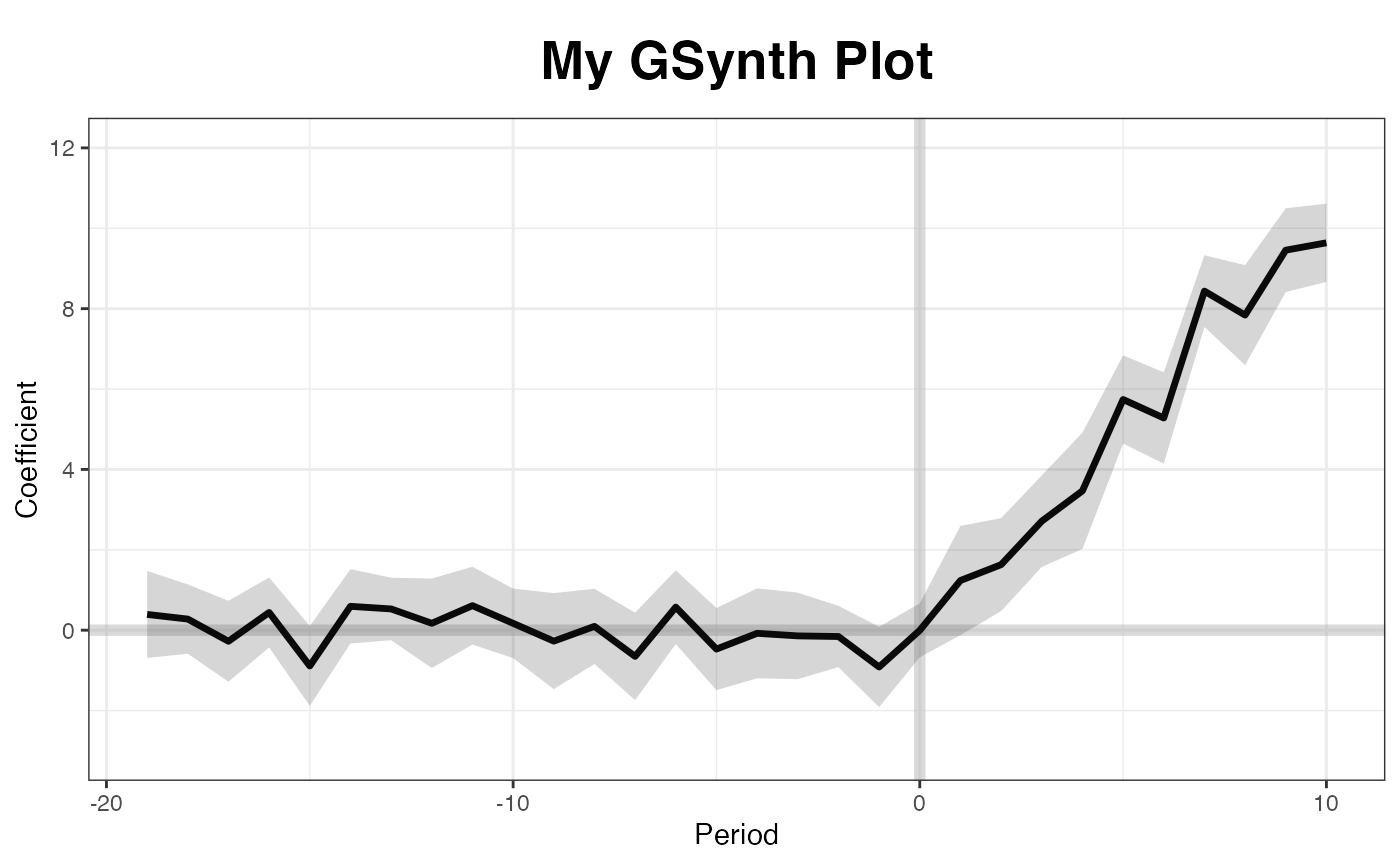

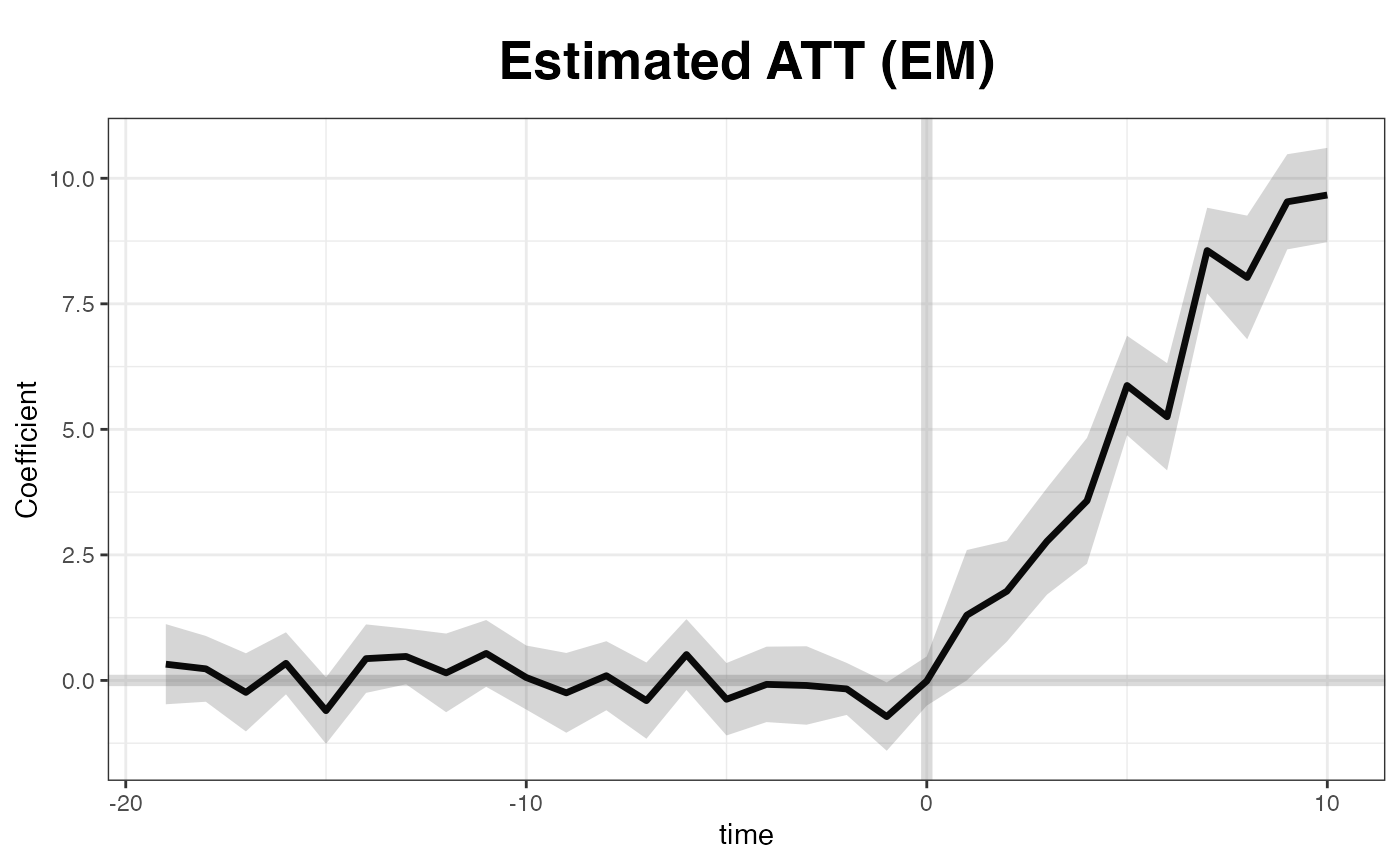

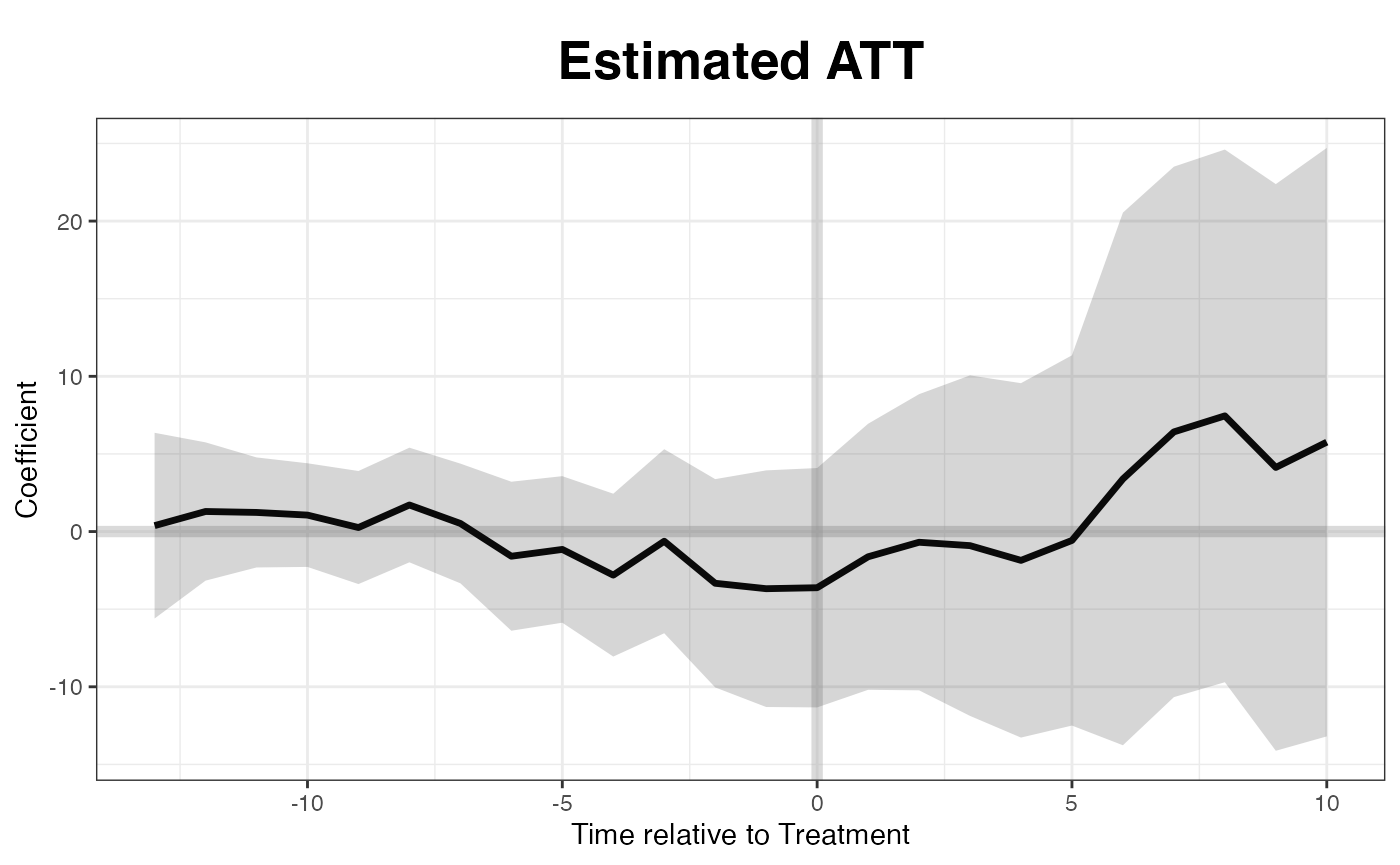

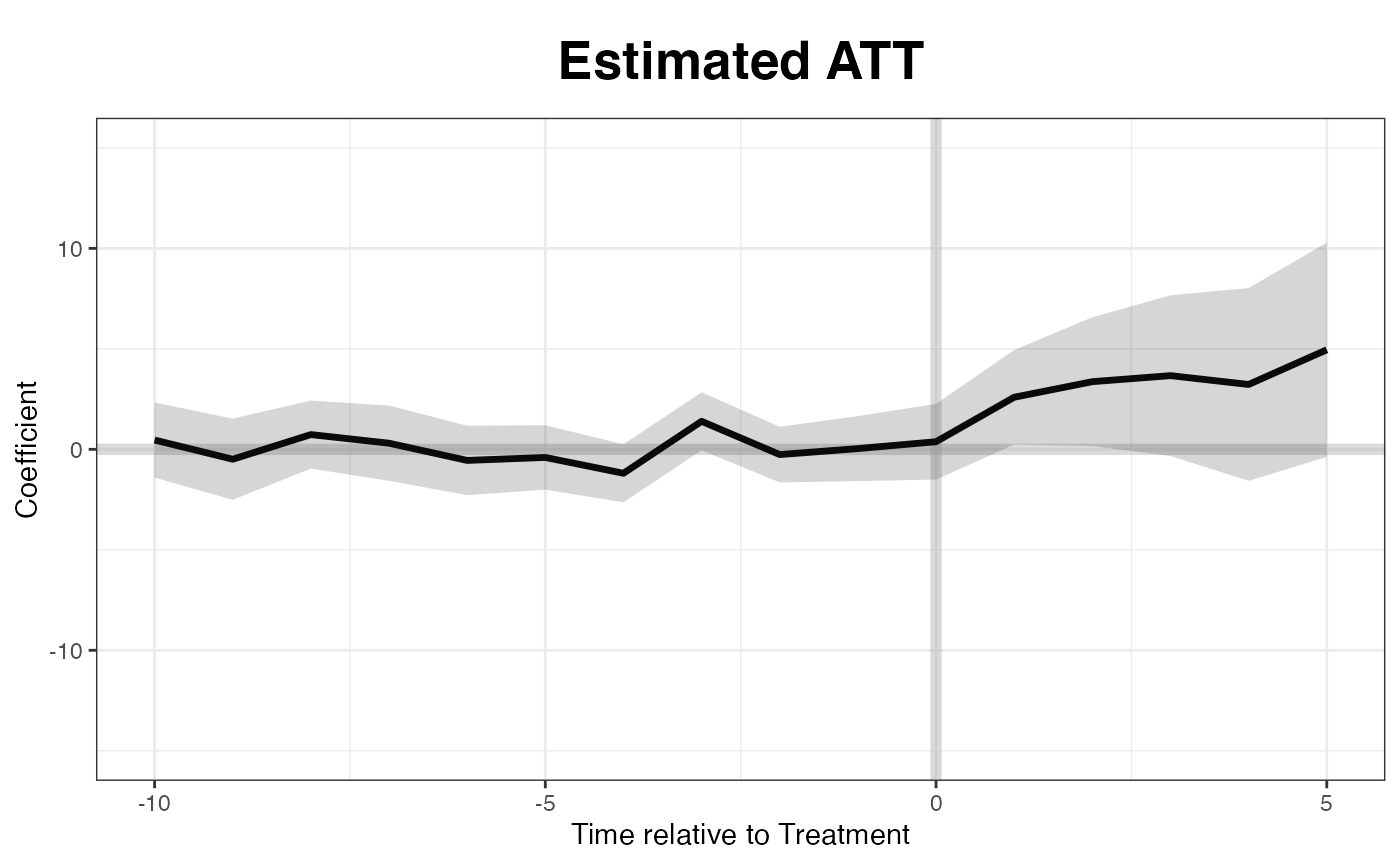

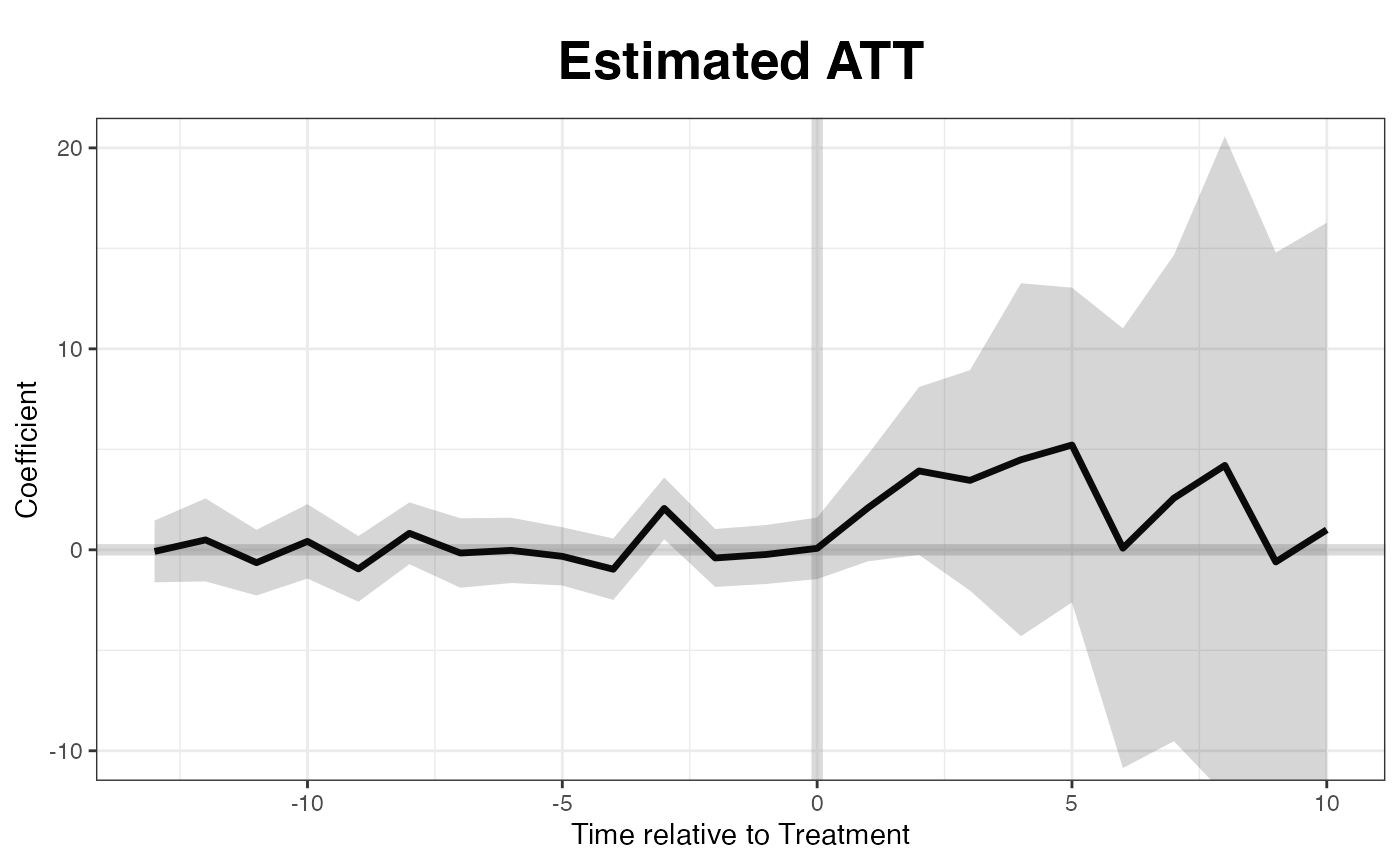

By default, the plot function produce a “gap” plot – as if we type plot(out, type = "gap") – which visualize the estimated ATT by period. For your reference, the true effects in simdata go from 1 to 10 (plus some white noise) from period 21 to 30. You can save the graph, a ggplot2 object, by directing print to an object name.

plot(out) # by default

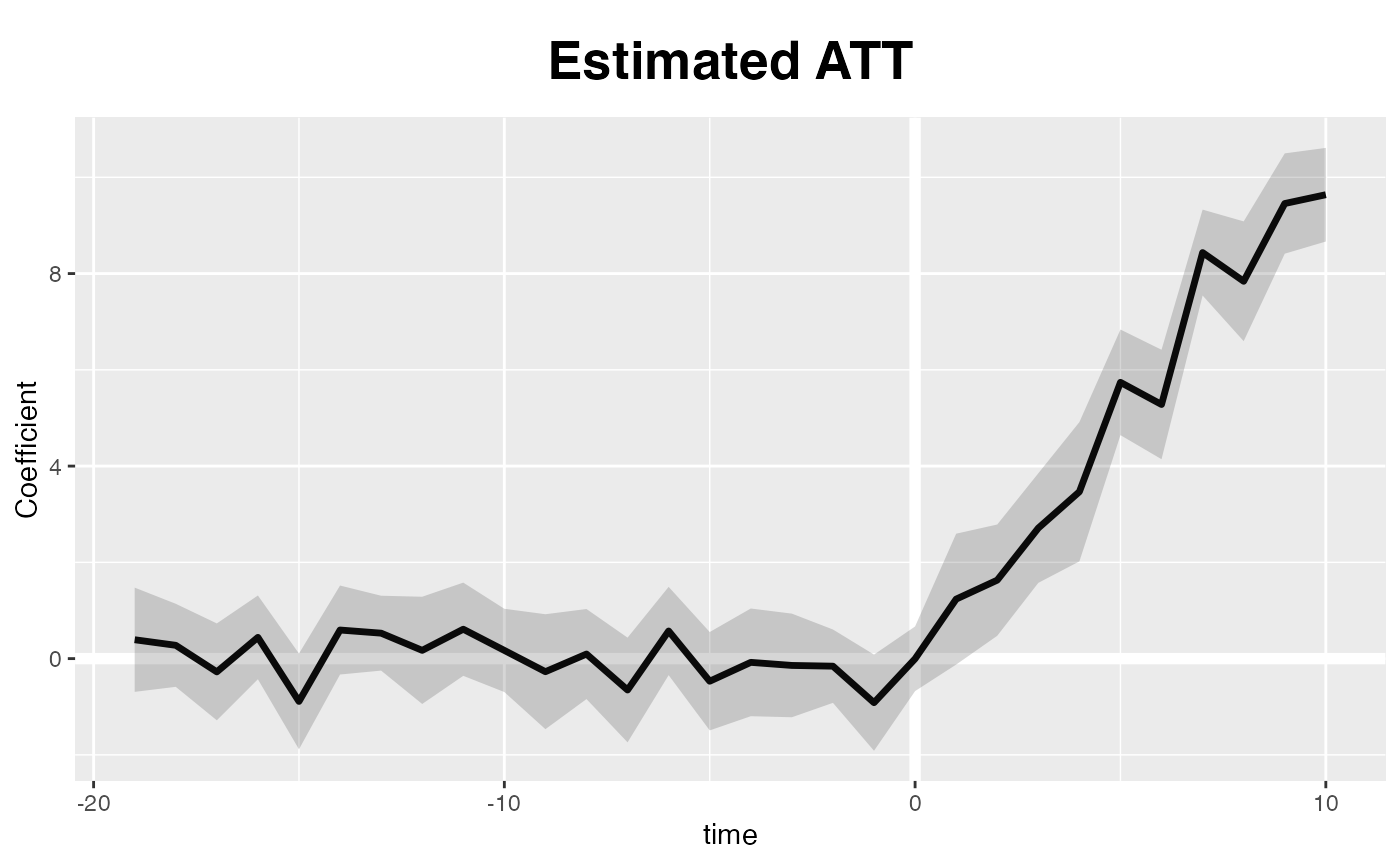

A default ggplot2 theme is available, too:

plot(out, theme.bw = FALSE)

Users can adjust xlim and ylim, and supply the plot title and titles for the two axes.

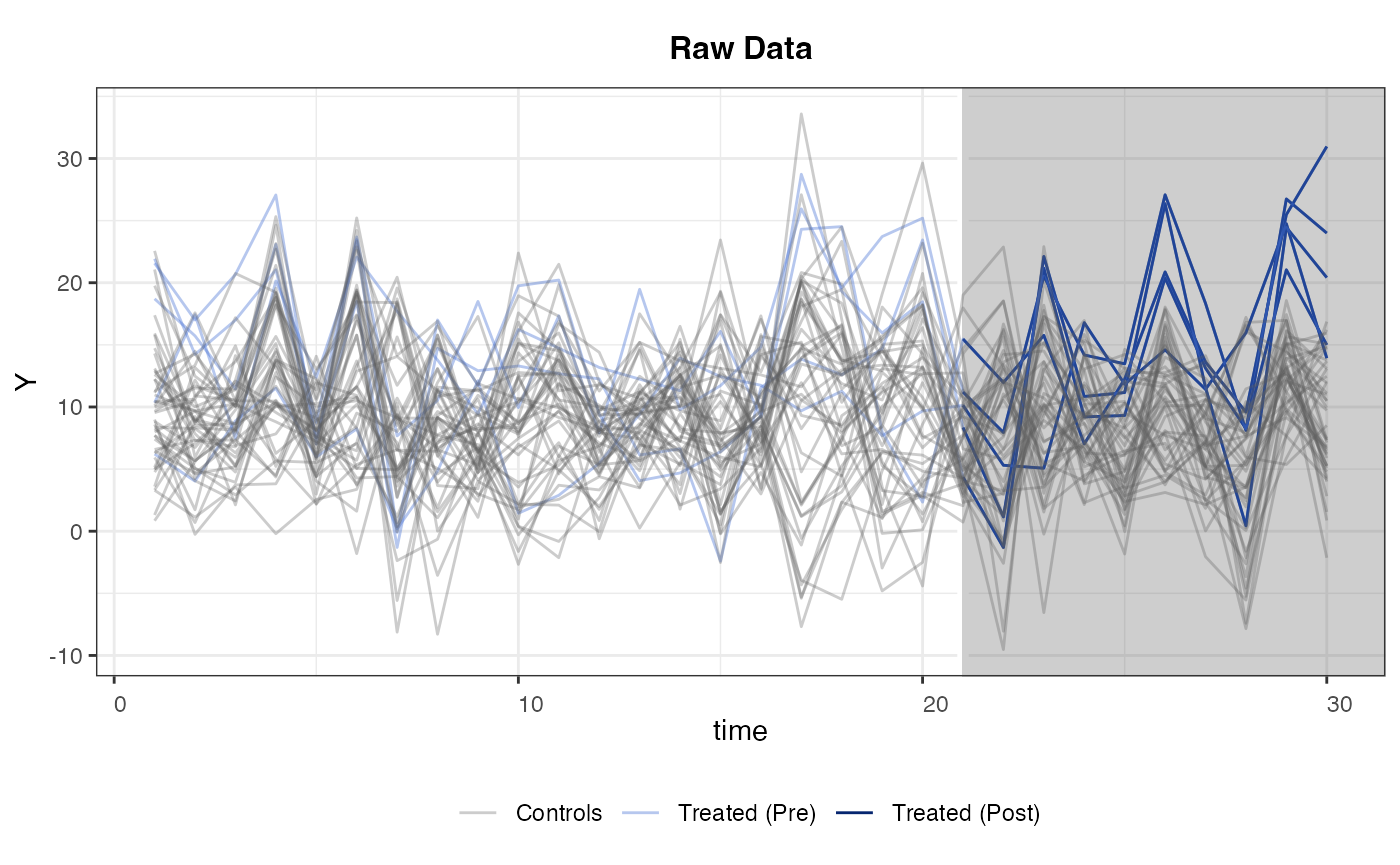

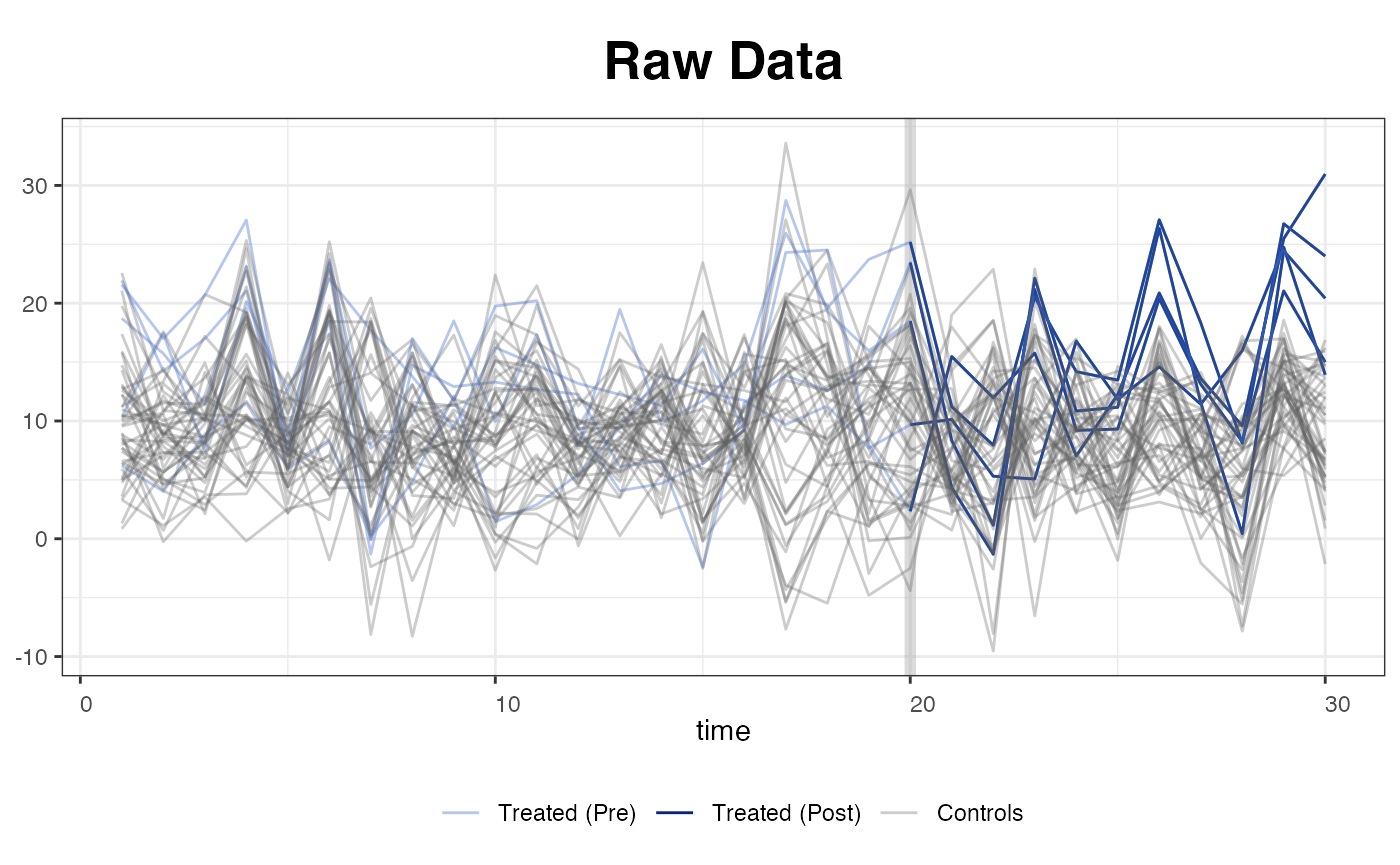

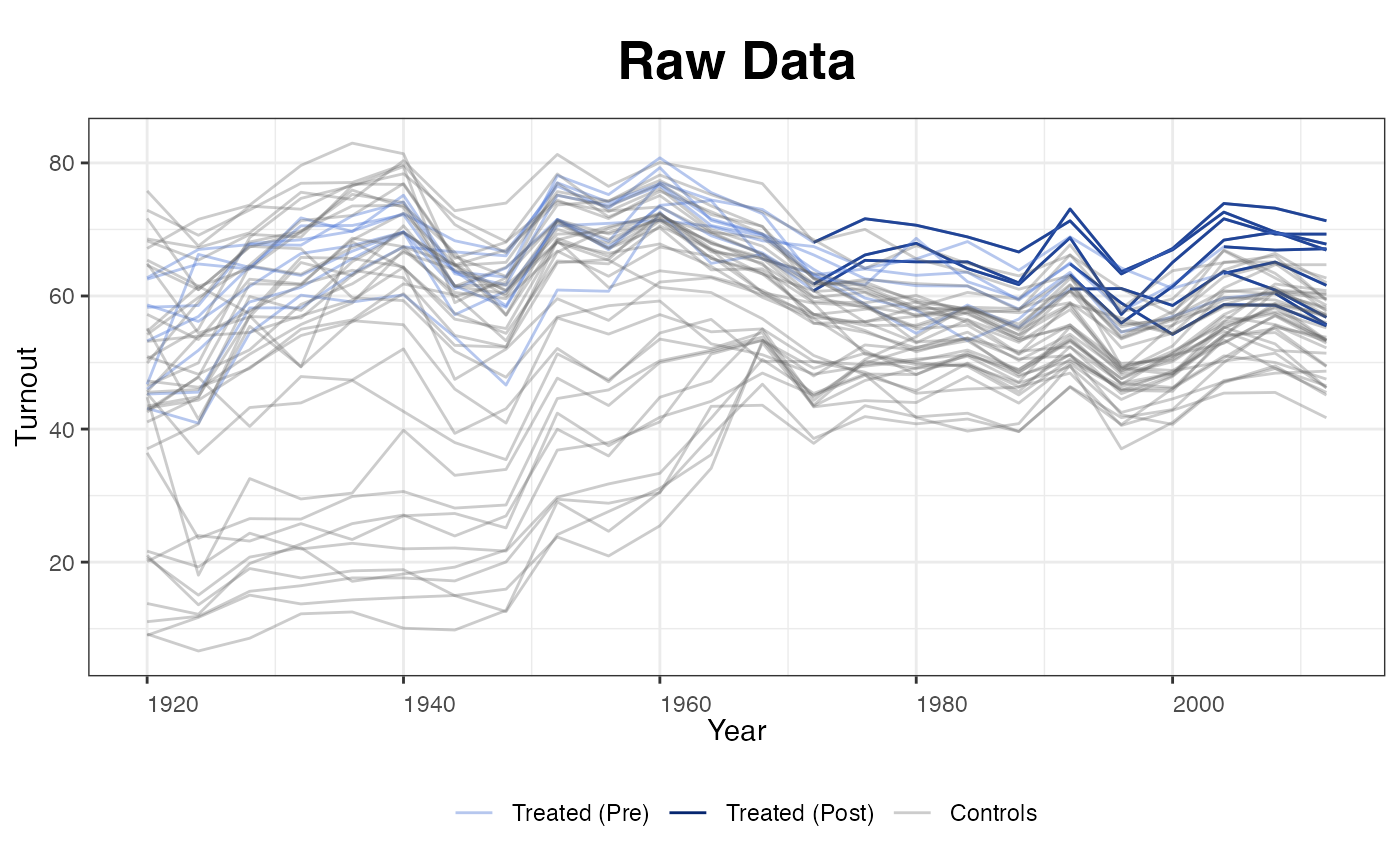

The other four type options include "raw", which plots the raw data (outcome) variable as panelview() does; "counterfactual" (or "ct" for short), which plots the estimated counterfactual(s); and "factors" and "loadings", which plot estimated factors and loadings, respectively. The next figure is a raw plot with a black-and-white theme. The treated (pre- and post-treatment) and control units are painted with different colors.

plot(out, type = "raw")

We can set the axes limits, remove the legend and title, for example, by typing (figure not shown):

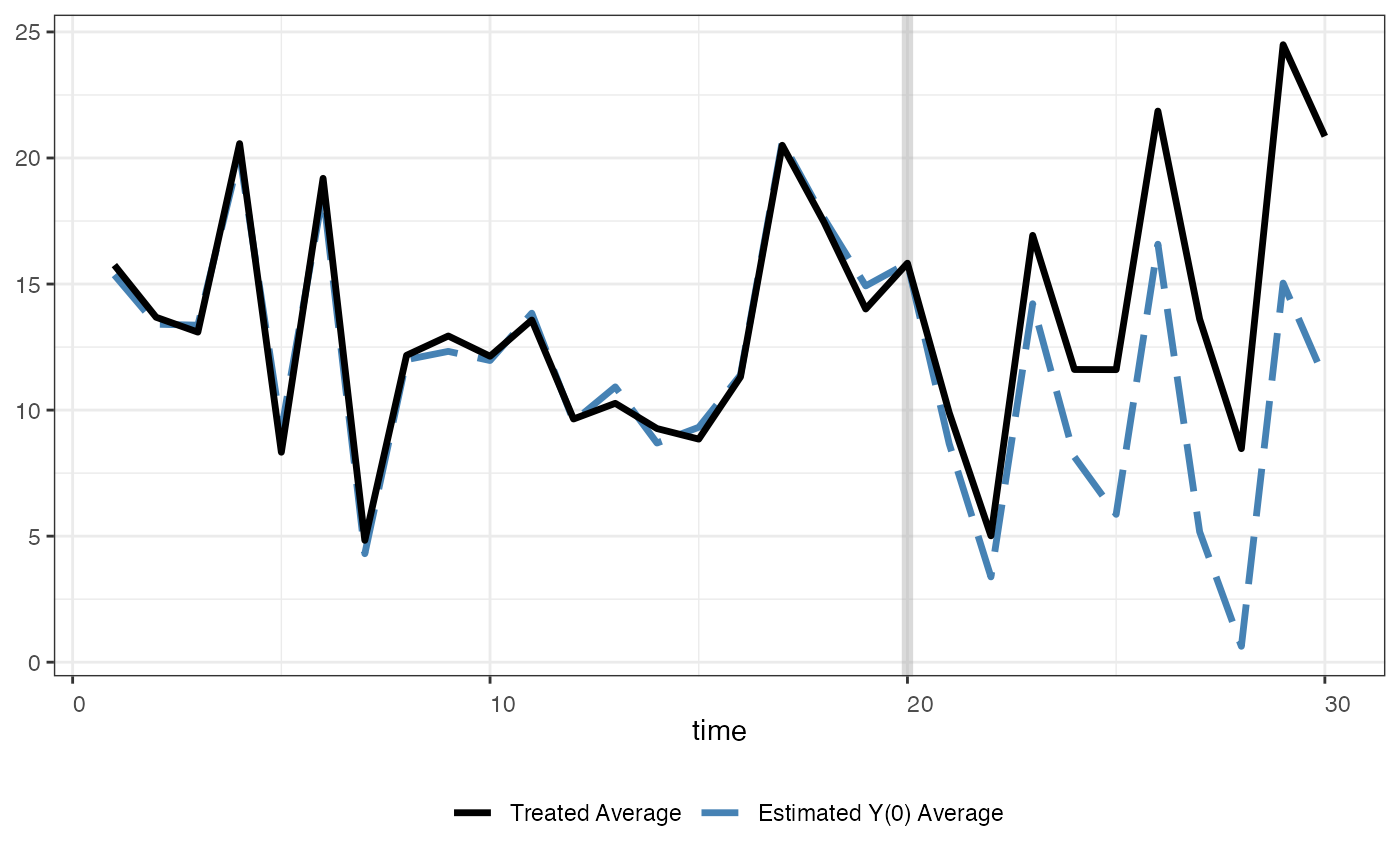

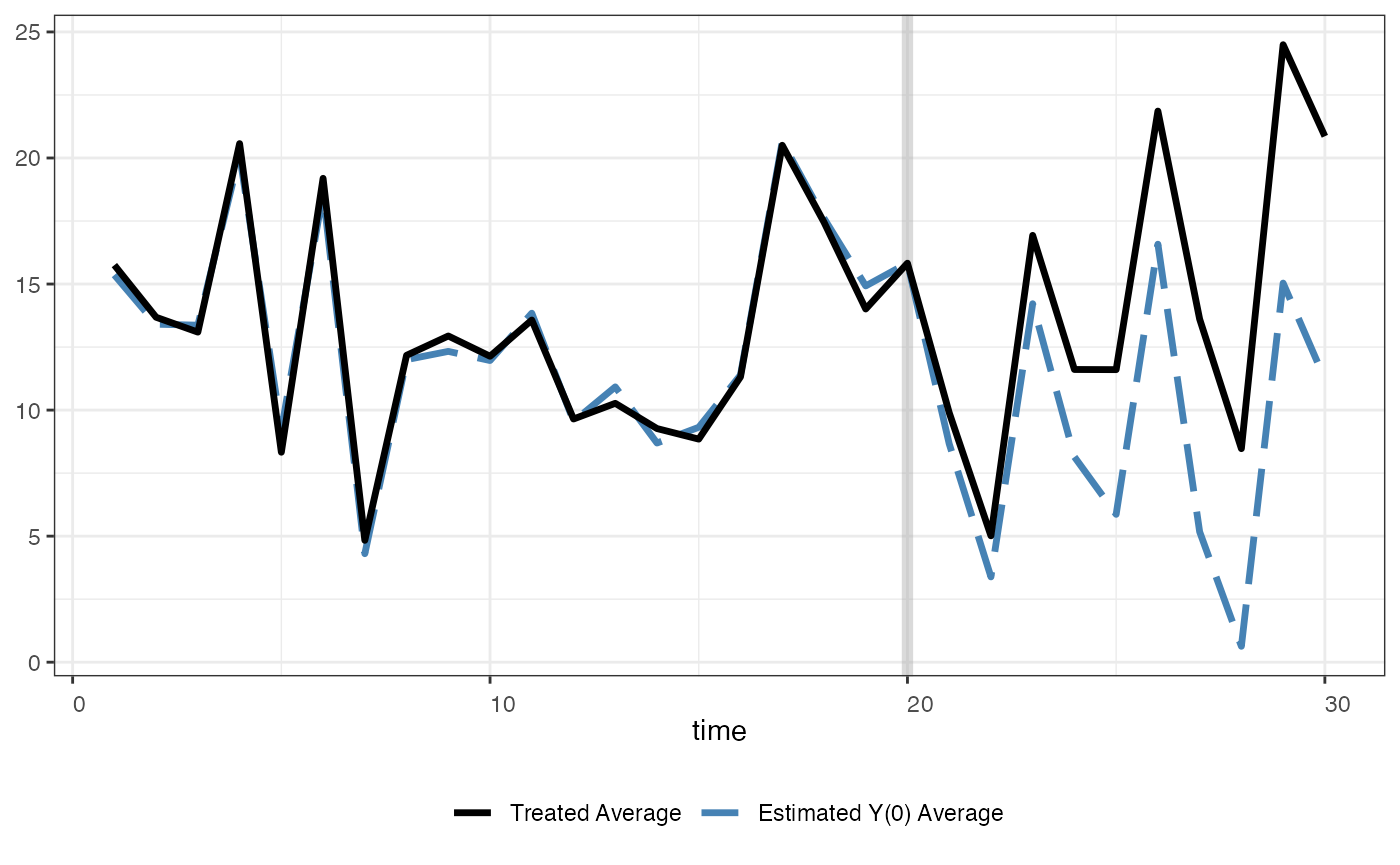

We use the following line of command to plot the estimated counterfactual. raw = "none" (the default option) means that we do not include the raw data in this figure:

plot(out, type = "counterfactual", raw = "none", main="")

One can use shade.post to control the shading in the post-treatment period

plot(out, type = "ct", raw = "none", main = "",

shade.post = FALSE)

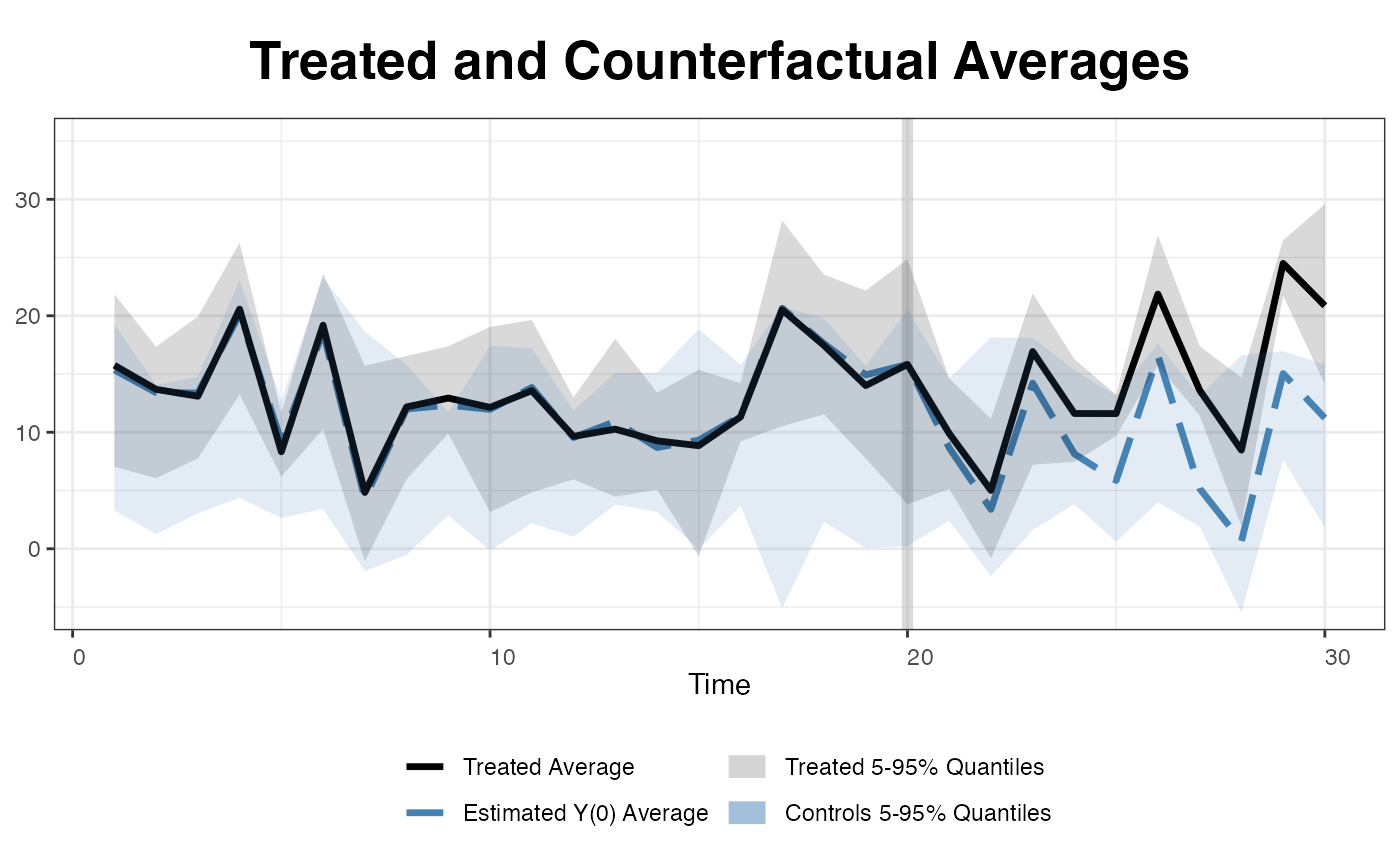

We can also add two 5 to 95% quantile bands of the treated and control outcomes as references to make sure the estimated counterfactuals are not results of severe extrapolations.

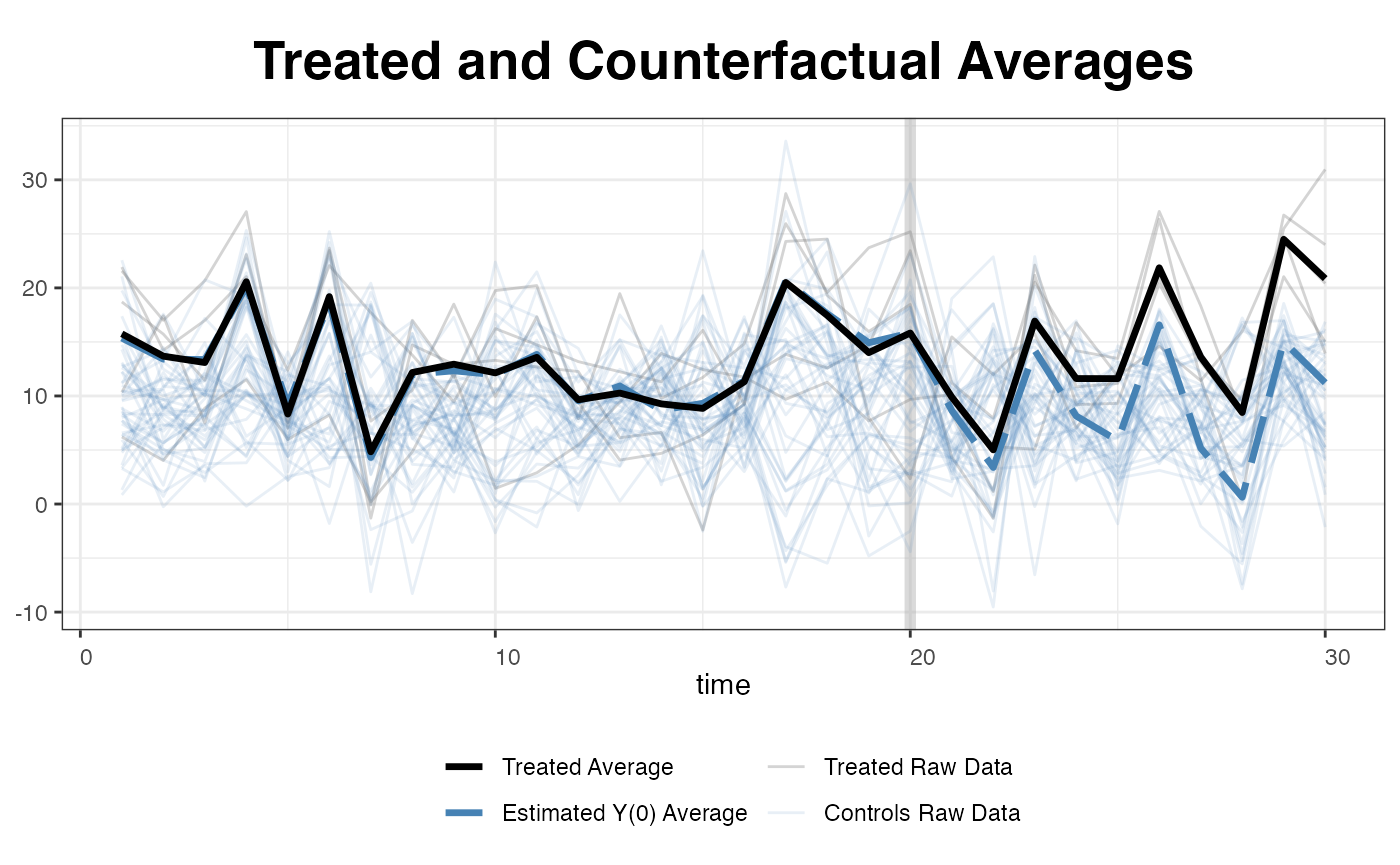

… or simply plot all the raw data (for the outcome variable).

plot(out, type = "counterfactual", raw = "all")

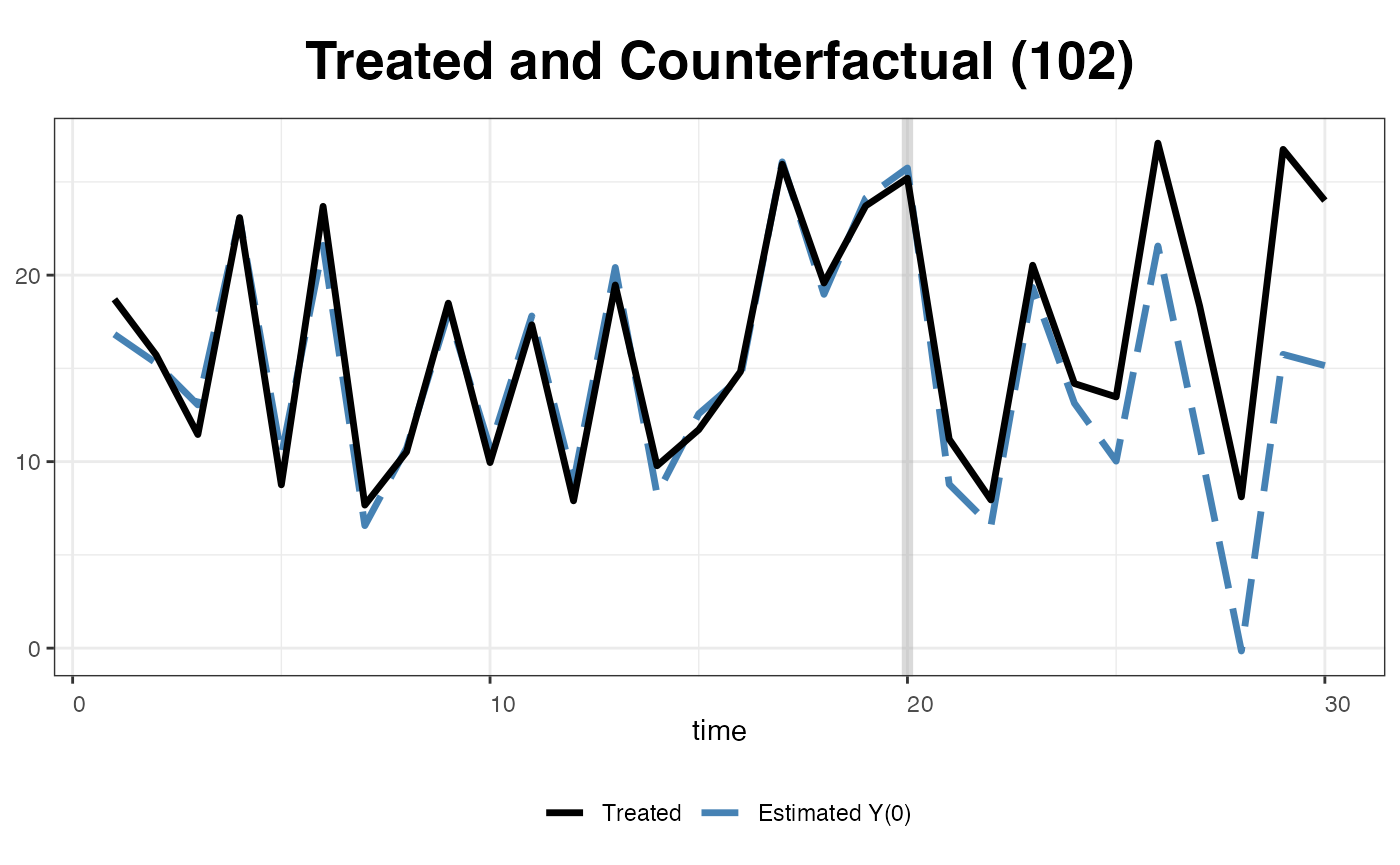

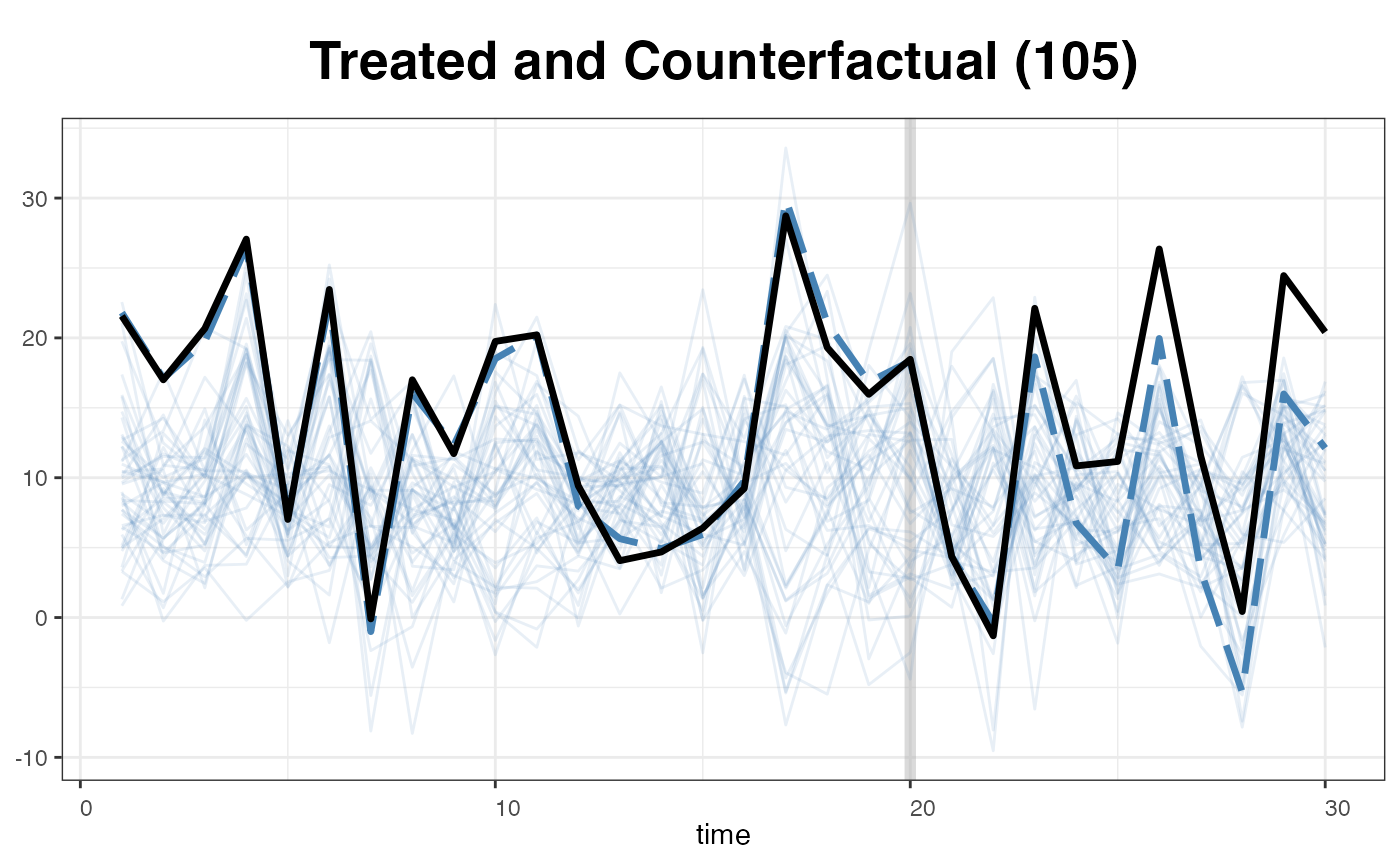

One can also visualize the estimated counterfactual for each treated unit to evaluate the quality of model fit.

plot(out, type = "counterfactual", id = 102)

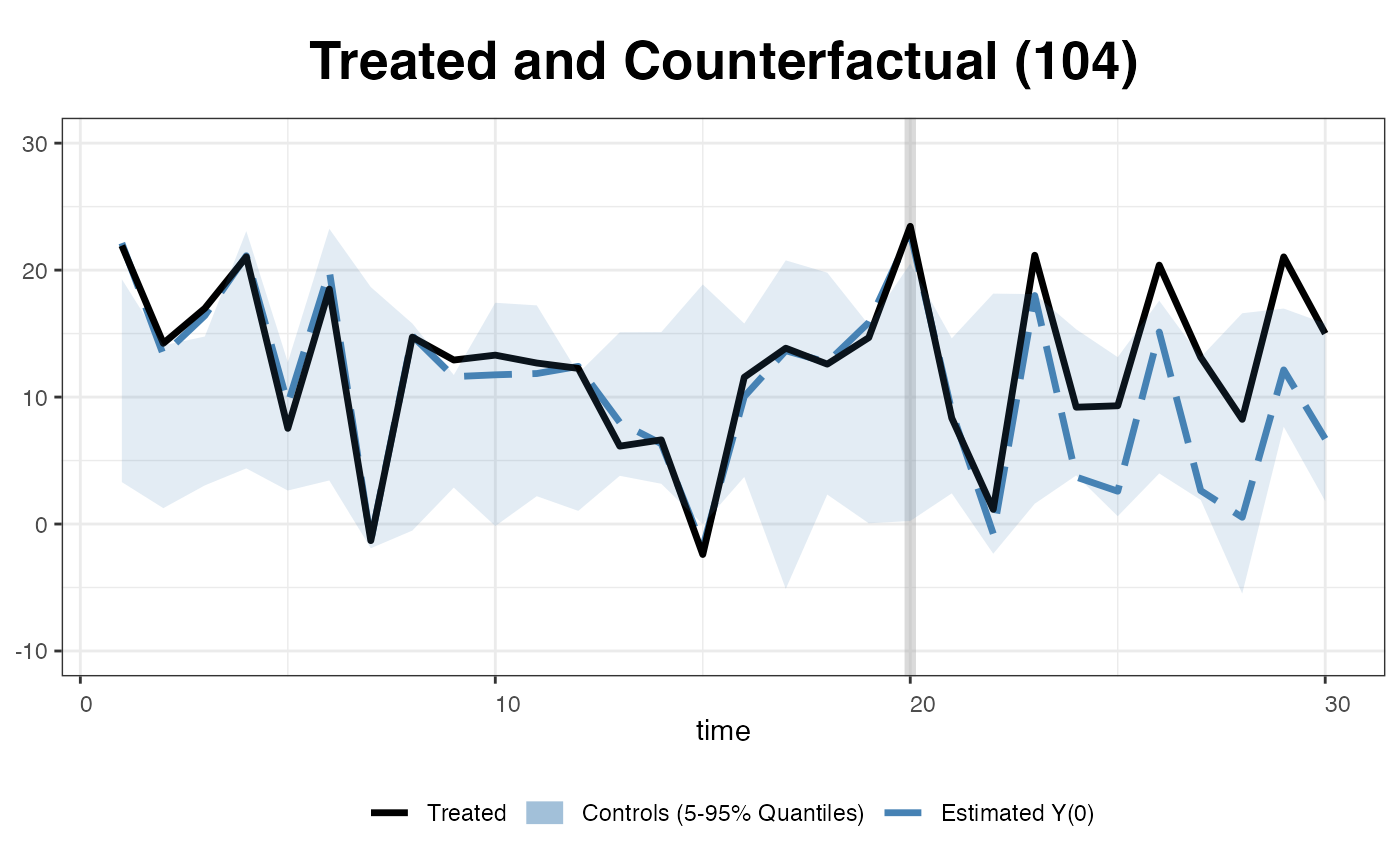

We can add the reference band, a 5 to 95% quantile band the control outcomes.

… or replaced the band with the raw data of the control outcomes.

plot(out, type = "counterfactual", id = 105,

raw = "all", legendOff = TRUE)

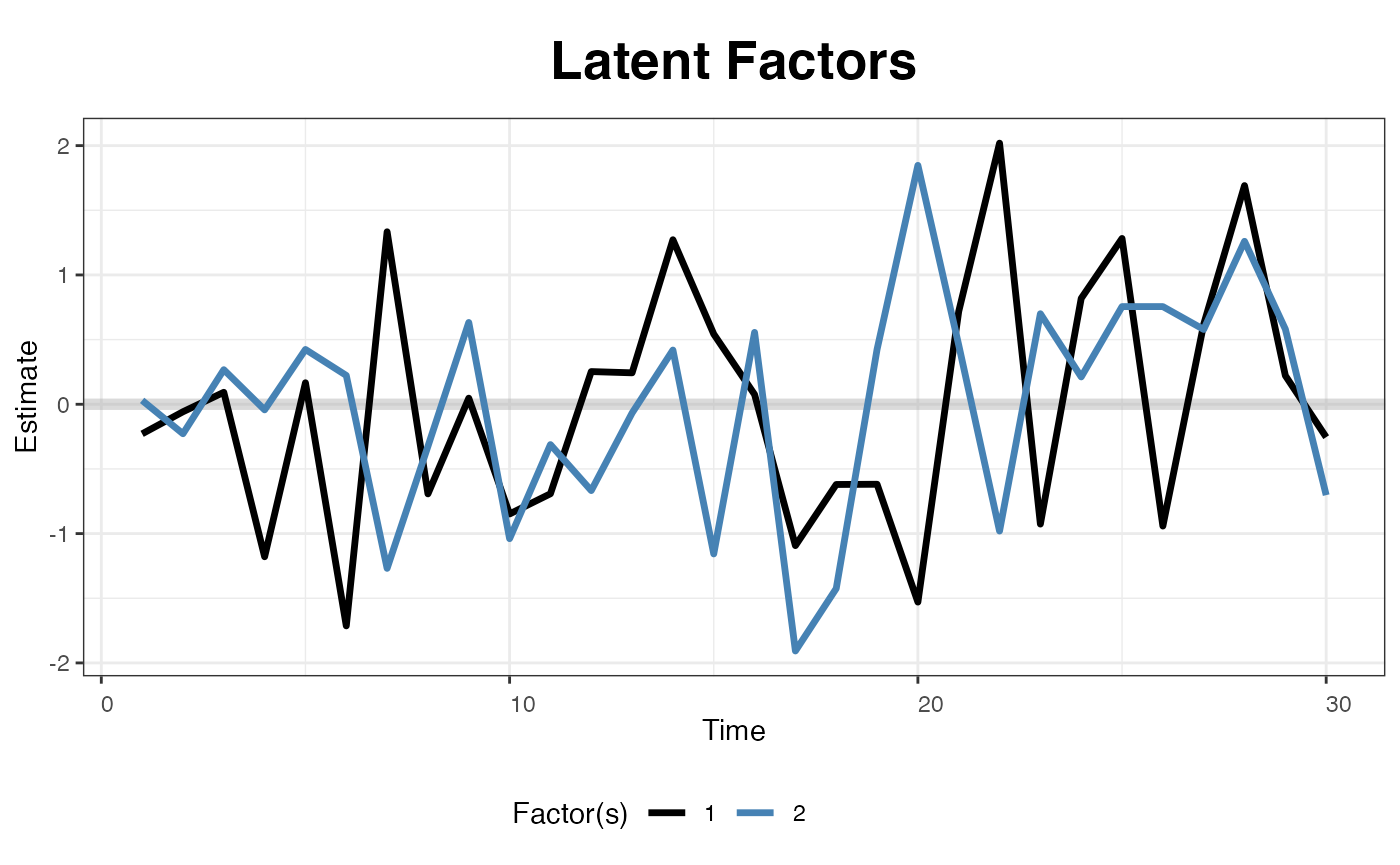

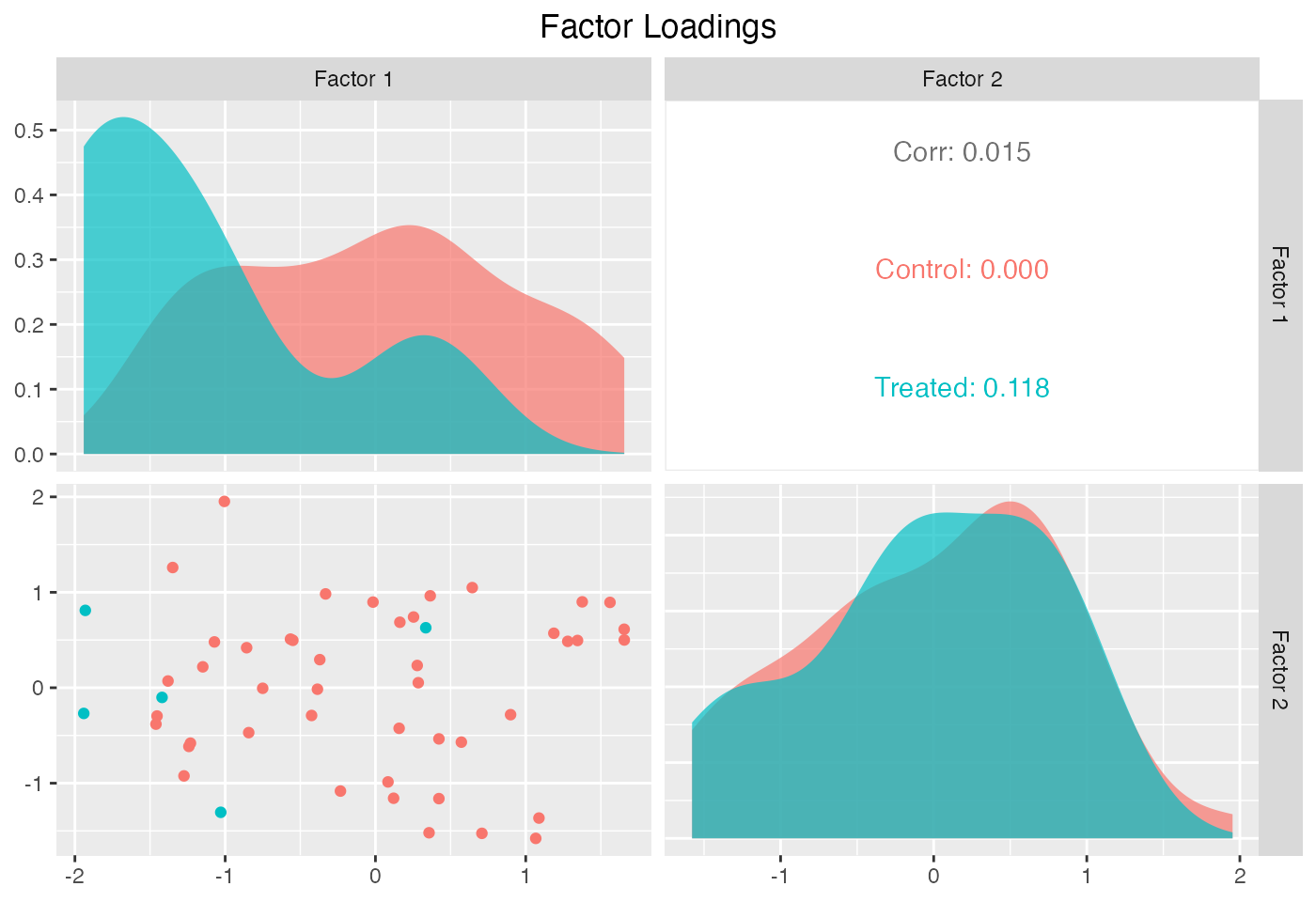

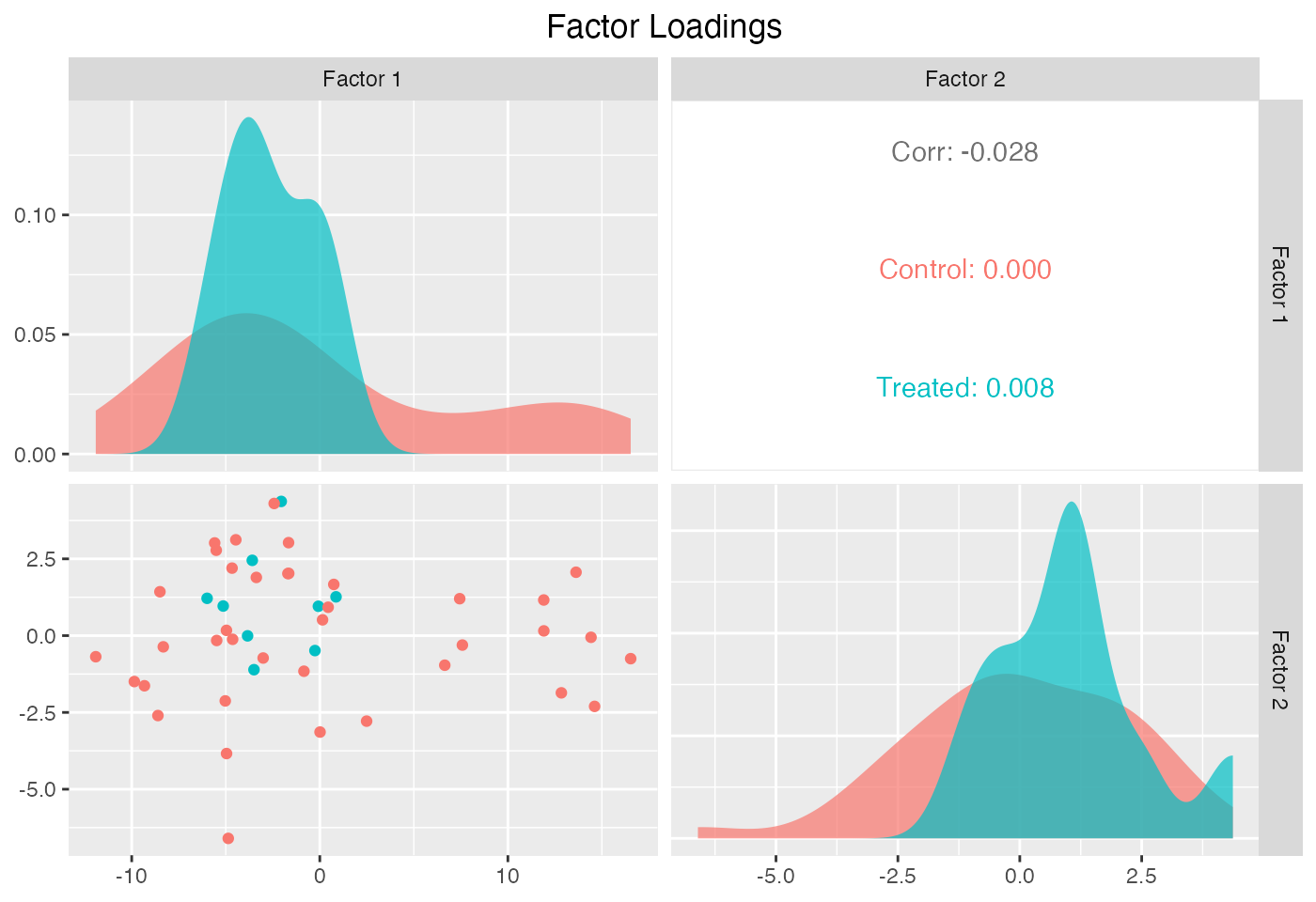

The next two figures plot the estimated factors and factor loadings, respectively.

plot(out, type = "factors", xlab = "Time")

plot(out, type = "loadings")

EM method

The EM algorithm proposed by Gobillon and Magnac (2016) takes advantage of the treatment group information in the pre-treatment period. We implement this method. The estimation takes more time, but the results are very similar to that from the original method – the coefficients will be slightly more precisely estimated.

system.time(

out <- gsynth(Y ~ D + X1 + X2, data = simdata,

index = c("id","time"), EM = TRUE,

force = "two-way", inference = "parametric",

se = TRUE, nboots = 500, r = c(0, 5),

CV = TRUE, parallel = TRUE, cores = 4)

)

#> Parallel computing ...

#> Cross-validating ...

#> r = 0; sigma2 = 1.87052; IC = 1.03286; PC = 1.76603; MSPE = 2.38012

#> r = 1; sigma2 = 1.50792; IC = 1.20393; PC = 1.90937; MSPE = 1.75038

#> r = 2; sigma2 = 0.99174; IC = 1.16142; PC = 1.57657; MSPE = 1.17430*

#> r = 3; sigma2 = 0.93513; IC = 1.46912; PC = 1.79035; MSPE = 1.24820

#> r = 4; sigma2 = 0.88552; IC = 1.77104; PC = 1.98424; MSPE = 1.43445

#> r = 5; sigma2 = 0.84141; IC = 2.06634; PC = 2.16106; MSPE = 1.61788

#>

#> r* = 2

#>

#>

Bootstrapping ...

#>

#> user system elapsed

#> 4.111 0.086 36.454

plot(out, main = "Estimated ATT (EM)")

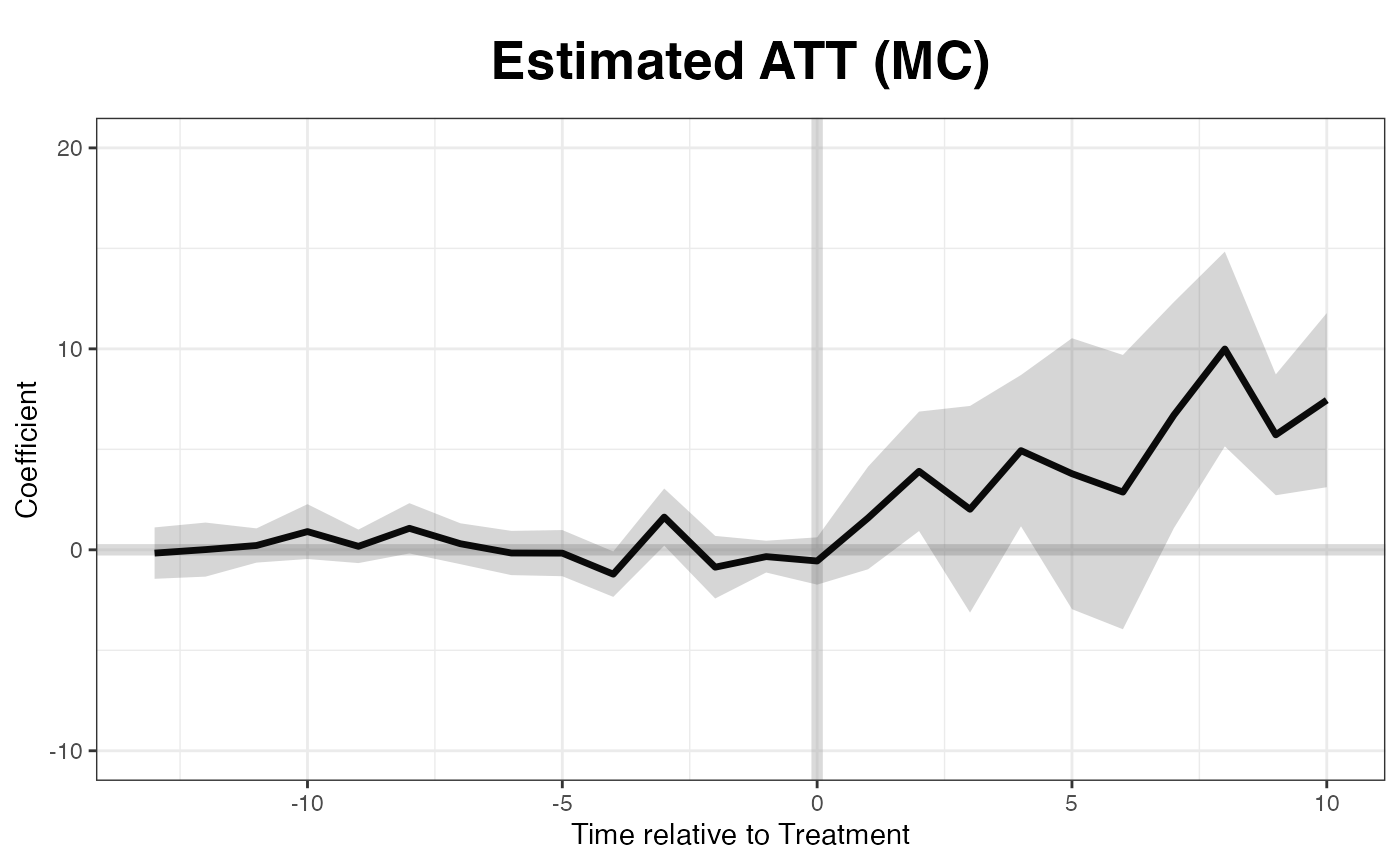

Matrix completion

In v1.0.9 or higher versions, we implement the matrix completion method proposed by Athey et al. (2021) by setting estimator = "mc" (the default is "ife", which represents the interactive fixed effects model). It uses a different regularization scheme and takes advantage of the treatment group information in the pre-treatment period. The option lambda controls the tuning parameter. If it is left blank, a sequence of candidate lambdas will be generated and tested sequentially. Users can set the length of candidate list by setting, for example, nlambda = 10. The option lambda can also receive a user-specified sequence as candidate lambdas. A built in cross-validation procedure will select the optimal hyper parameter when a single lambda is given. Users can specify the number of folds in cross-validation using the option k (e.g. k = 5).

system.time(

out <- gsynth(Y ~ D + X1 + X2, data = simdata,

estimator = "mc", index = c("id","time"),

se = TRUE, nboots = 500, r = c(0, 5),

CV = TRUE, force = "two-way",

parallel = TRUE, cores = 4,

inference = "nonparametric")

)

#> Parallel computing ...

#> Cross-validating ...

#> lambda.norm = 1.00000; MSPE = 2.11398

#> lambda.norm = 0.42170; MSPE = 1.58195*

#> lambda.norm = 0.17783; MSPE = 1.76898

#> lambda.norm = 0.07499; MSPE = 1.94975

#> lambda.norm = 0.03162; MSPE = 2.05700

#> lambda.norm = 0.01334; MSPE = 2.08996

#> lambda.norm = 0.00562; MSPE = 2.10347

#> lambda.norm = 0.00237; MSPE = 2.10948

#> lambda.norm = 0.00100; MSPE = 2.11207

#> lambda.norm = 0.00000; MSPE = 2.11398

#>

#> lambda.norm* = 0.4216965

#>

#>

Bootstrapping ...

#>

#> user system elapsed

#> 0.617 0.059 19.337

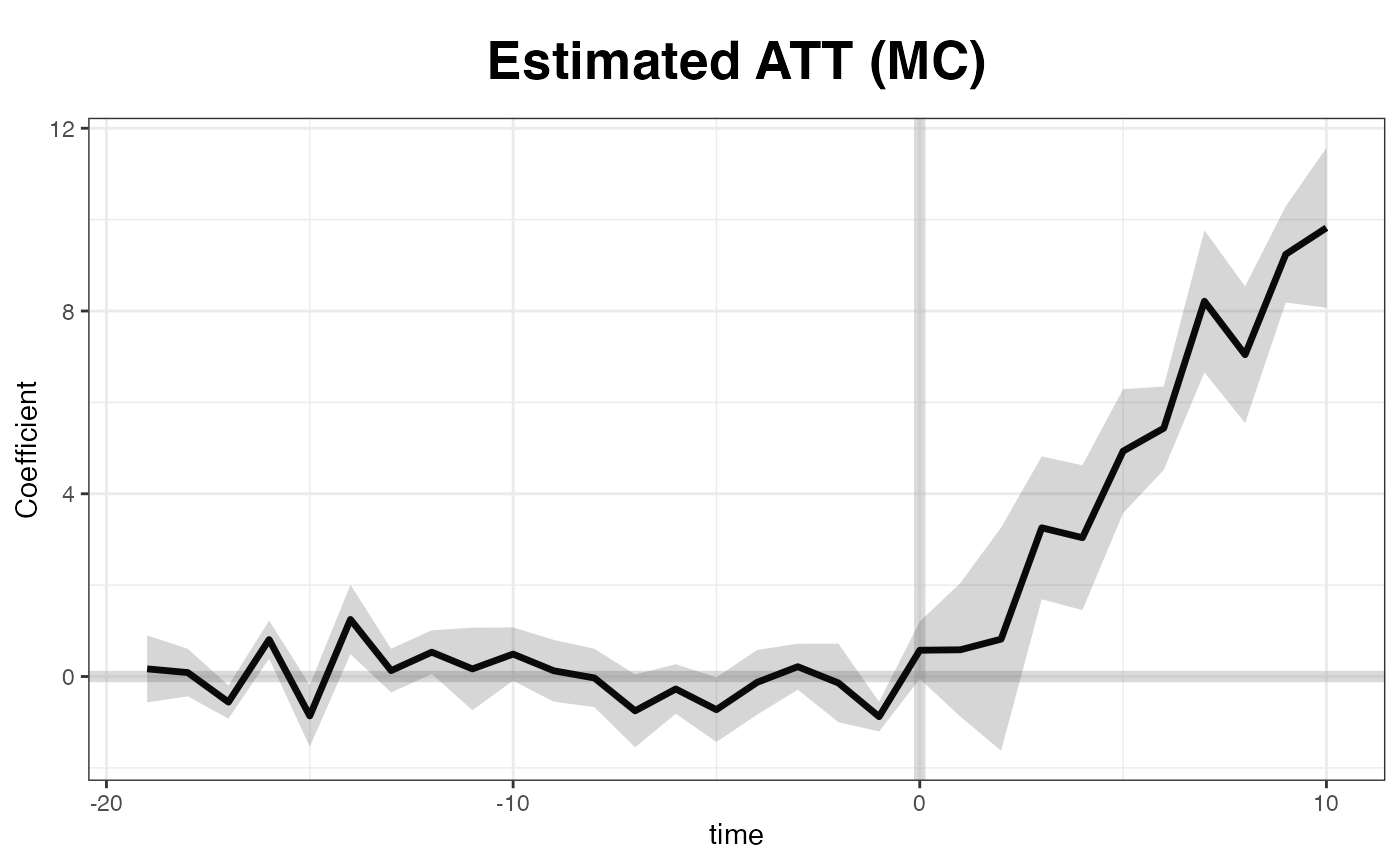

plot(out, main = "Estimated ATT (MC)")

Staggered DID

The second example investigates the effect of Election-Day Registration (EDR) reforms on voter turnout in the United States. Note that the treatment kicks in at different times.

data(gsynth)

names(turnout)

#> [1] "abb" "year" "turnout" "policy_edr"

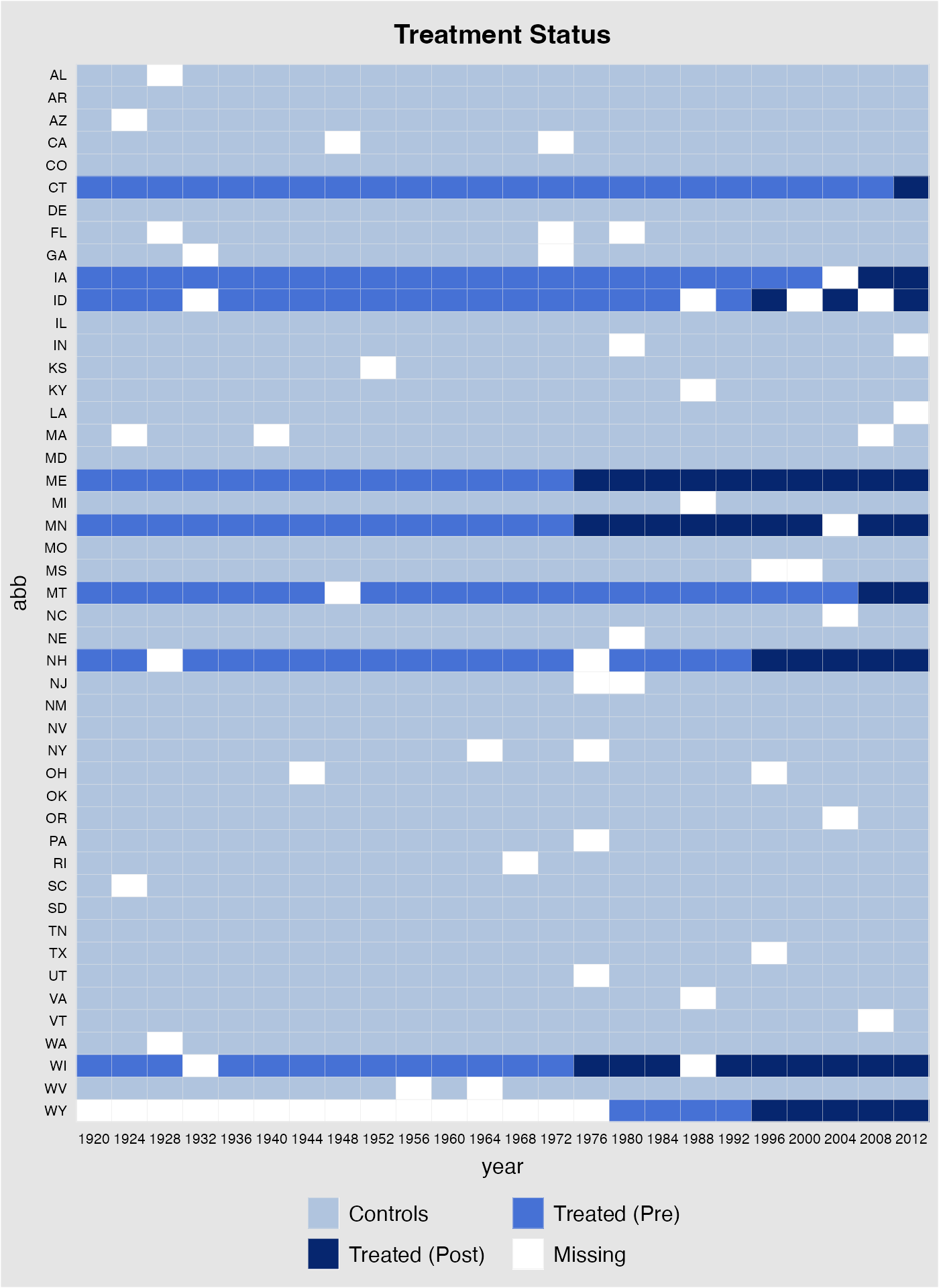

#> [5] "policy_mail_in" "policy_motor"Data structure

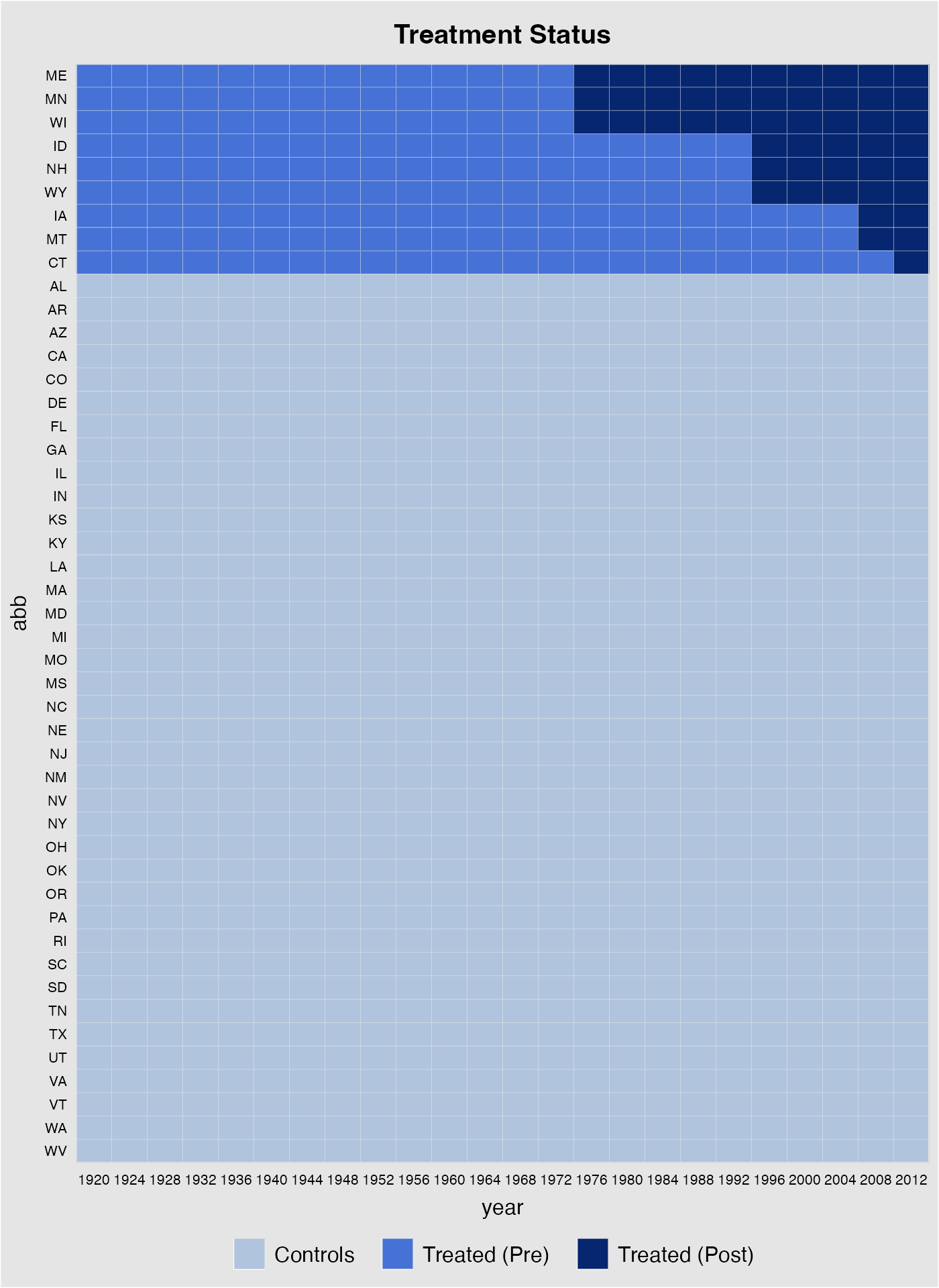

First, we take a look at the data structure. The following figure shows that (1) we have a balanced panel with 9 treated units and (2) the treatment starts at different time periods.

panelview(turnout ~ policy_edr, data = turnout,

index = c("abb","year"), pre.post = TRUE,

by.timing = TRUE)

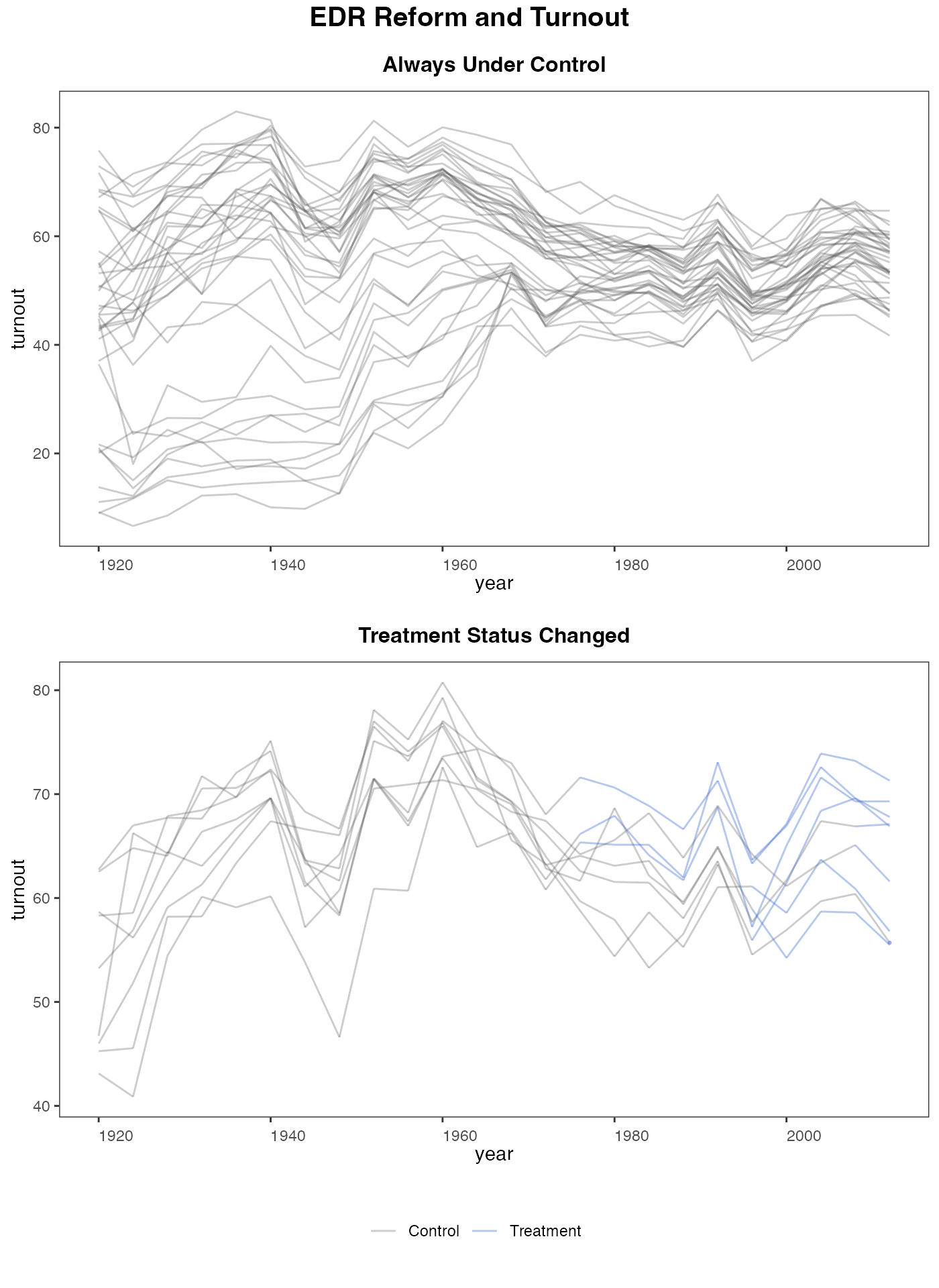

panelview can also visualize the outcome variable by group (change in treatment status)

panelview(turnout ~ policy_edr, data = turnout,

index = c("abb","year"), type = "outcome",

main = "EDR Reform and Turnout",

by.group = TRUE)

Estimation w/o factors

When no factor is assumed, the estimates are close to what we obtain from difference-in-differences:

out0 <- gsynth(turnout ~ policy_edr + policy_mail_in + policy_motor,

data = turnout, index = c("abb","year"),

se = TRUE, inference = "parametric",

r = 0, CV = FALSE, force = "two-way",

nboots = 1000, seed = 02139)

#> Parallel computing ...

#>

Simulating errors ...

Bootstrapping ...

#>

Estimation w/ factors

Now we allow the algorithm to find the “correct” number of factors that predicts the pre-treatment data the best:

out <- gsynth(turnout ~ policy_edr + policy_mail_in + policy_motor,

data = turnout, index = c("abb","year"),

se = TRUE, inference = "parametric",

r = c(0, 5), CV = TRUE, force = "two-way",

nboots = 1000, seed = 02139)

#> Parallel computing ...

#> Cross-validating ...

#> r = 0; sigma2 = 77.99366; IC = 4.82744; PC = 72.60594; MSPE = 22.13889

#> r = 1; sigma2 = 13.65239; IC = 3.52566; PC = 21.67581; MSPE = 12.03686

#> r = 2; sigma2 = 8.56312; IC = 3.48518; PC = 19.23841; MSPE = 10.31254*

#> r = 3; sigma2 = 6.40268; IC = 3.60547; PC = 18.61783; MSPE = 11.48390

#> r = 4; sigma2 = 4.74273; IC = 3.70145; PC = 16.93707; MSPE = 16.28613

#> r = 5; sigma2 = 4.02488; IC = 3.91847; PC = 17.05226; MSPE = 15.78683

#>

#> r* = 2

#>

#>

Simulating errors ...

Bootstrapping ...

#> Implied weights

out$wgt.implied (\(N_{co}\times N_{tr}\)) stores the implied weights of all control units for each treated unit. Different from the synthetic control method, the weights can be both positive and negative. Below we show the control unit weights for Wisconsin.

dim(out$wgt.implied)

#> [1] 38 9

sort(out$wgt.implied[,8])

#> AL VA MS KY SC NC

#> -0.420446734 -0.359404870 -0.358899140 -0.323621805 -0.275363471 -0.222140041

#> GA MD FL TN AR NM

#> -0.214909889 -0.197804828 -0.185884567 -0.162290117 -0.151519318 -0.148094608

#> LA TX AZ MO DE OH

#> -0.127000746 -0.064591440 -0.057043363 -0.038932843 -0.001453018 0.010929464

#> OR VT NV CO MI IN

#> 0.029620626 0.049794411 0.073077120 0.079864605 0.090892631 0.091876103

#> KS NE IL OK WA WV

#> 0.093457125 0.104411283 0.133990028 0.158671615 0.159121640 0.181418421

#> SD PA NJ UT CA RI

#> 0.182877791 0.221808796 0.226279566 0.250193486 0.278783695 0.280519519

#> NY MA

#> 0.295315162 0.316497707Plot results

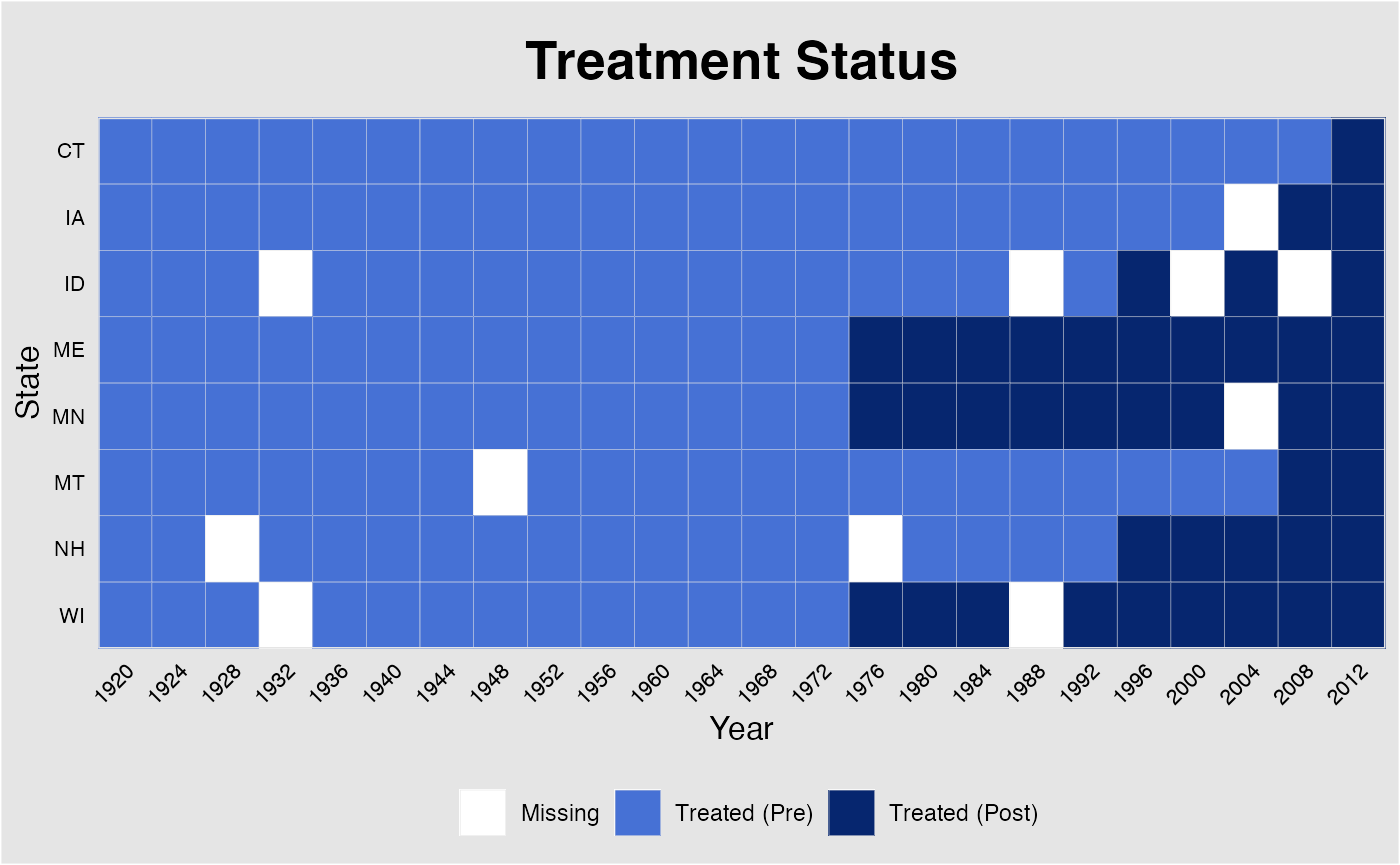

The missing data plot can also be produced after the estimation is performed. In addition, plot allows users to changes axes labels and the graph title (figure not shown).

plot(out, type = "missing", main = "Treatment Status",

xlab = "Year", ylab = "State")The following line of code produces the “gap” plot:

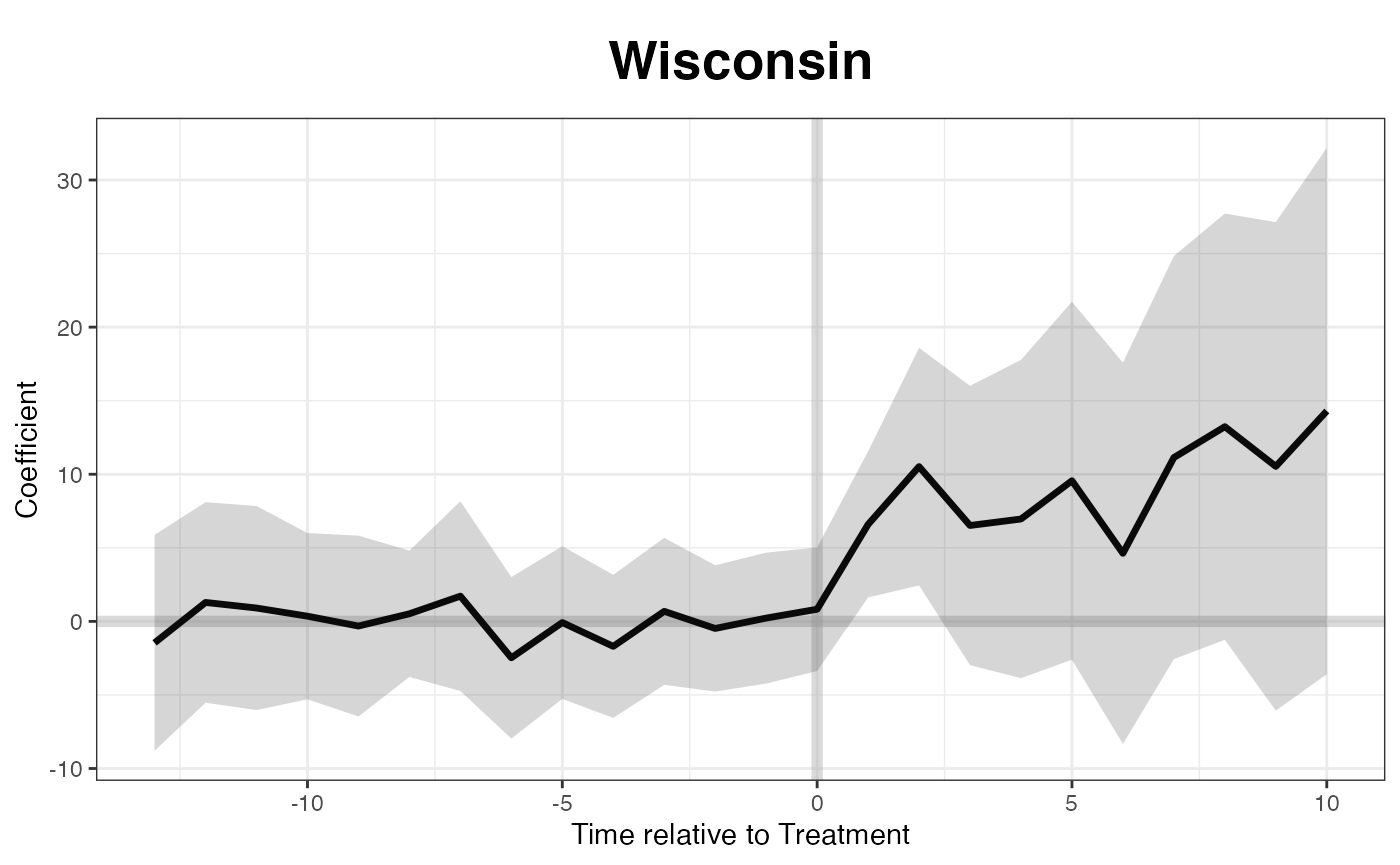

If we want to know the treatment effect on a specific state, such as Wisconsin, we can specify the id option:

plot(out, type = "gap", id = "WI", main = "Wisconsin")

Plotting the raw turnout data:

plot(out, type = "raw", xlab = "Year", ylab = "Turnout")

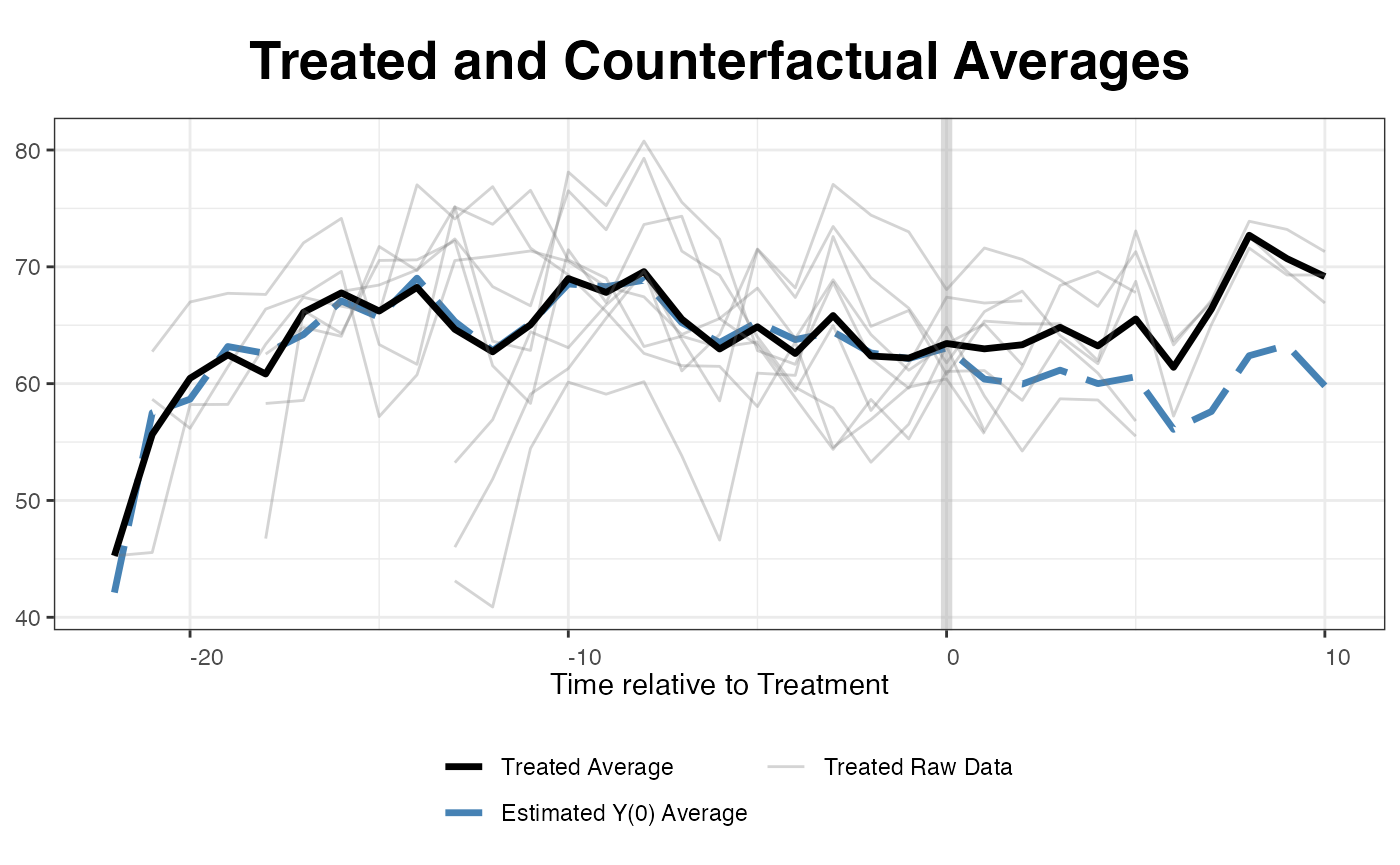

Plotting the estimated average counterfactual. Since EDR reforms kick in at different times, the algorithm realign the x-axis based on the timing of the treatment. raw = all shows the realigned outcomes of the treated units.

plot(out, type = "counterfactual", raw = "all")

We can plot estimated counterfactuals for each treated unit:

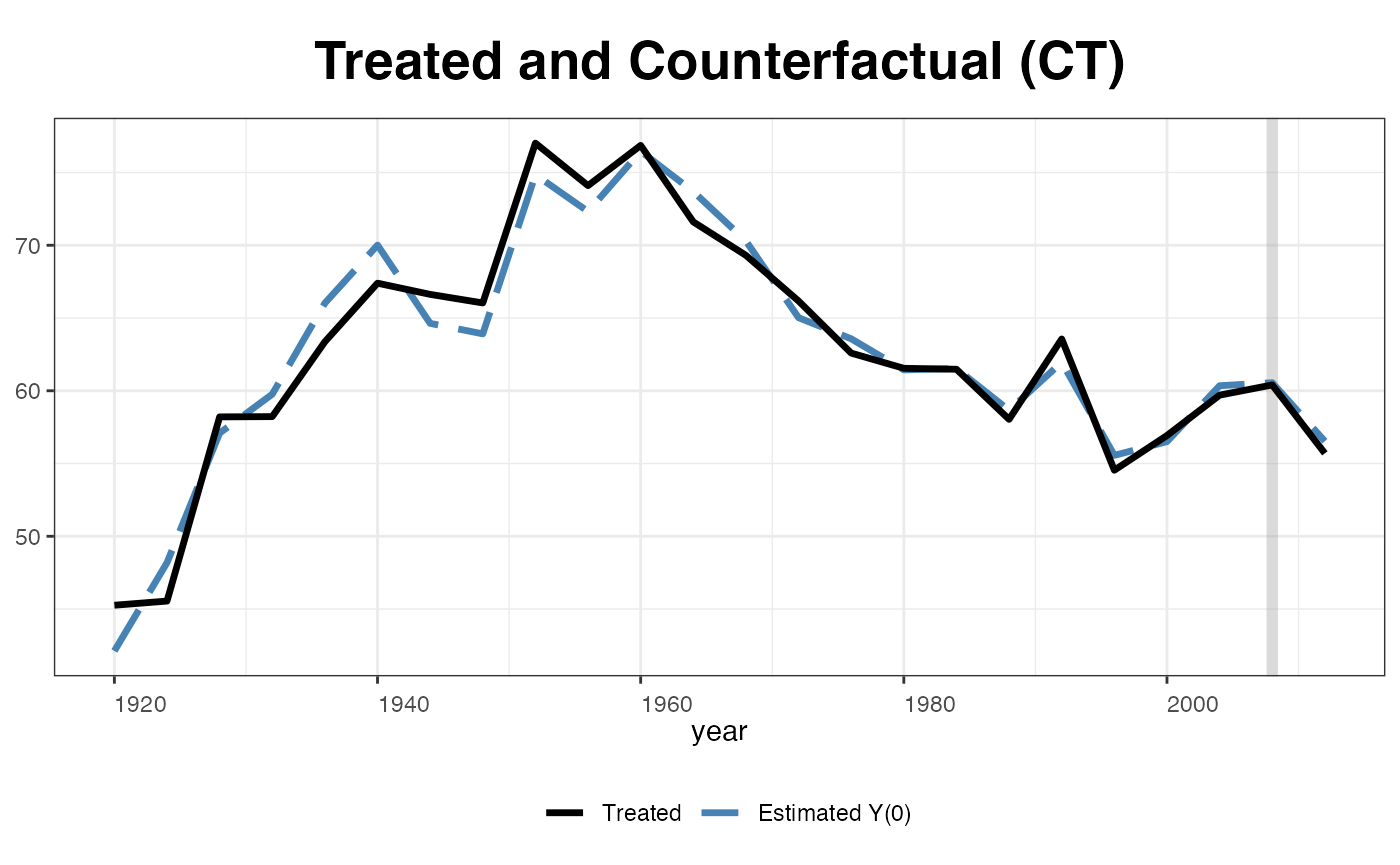

plot(out, type="counterfactual", id = "CT")

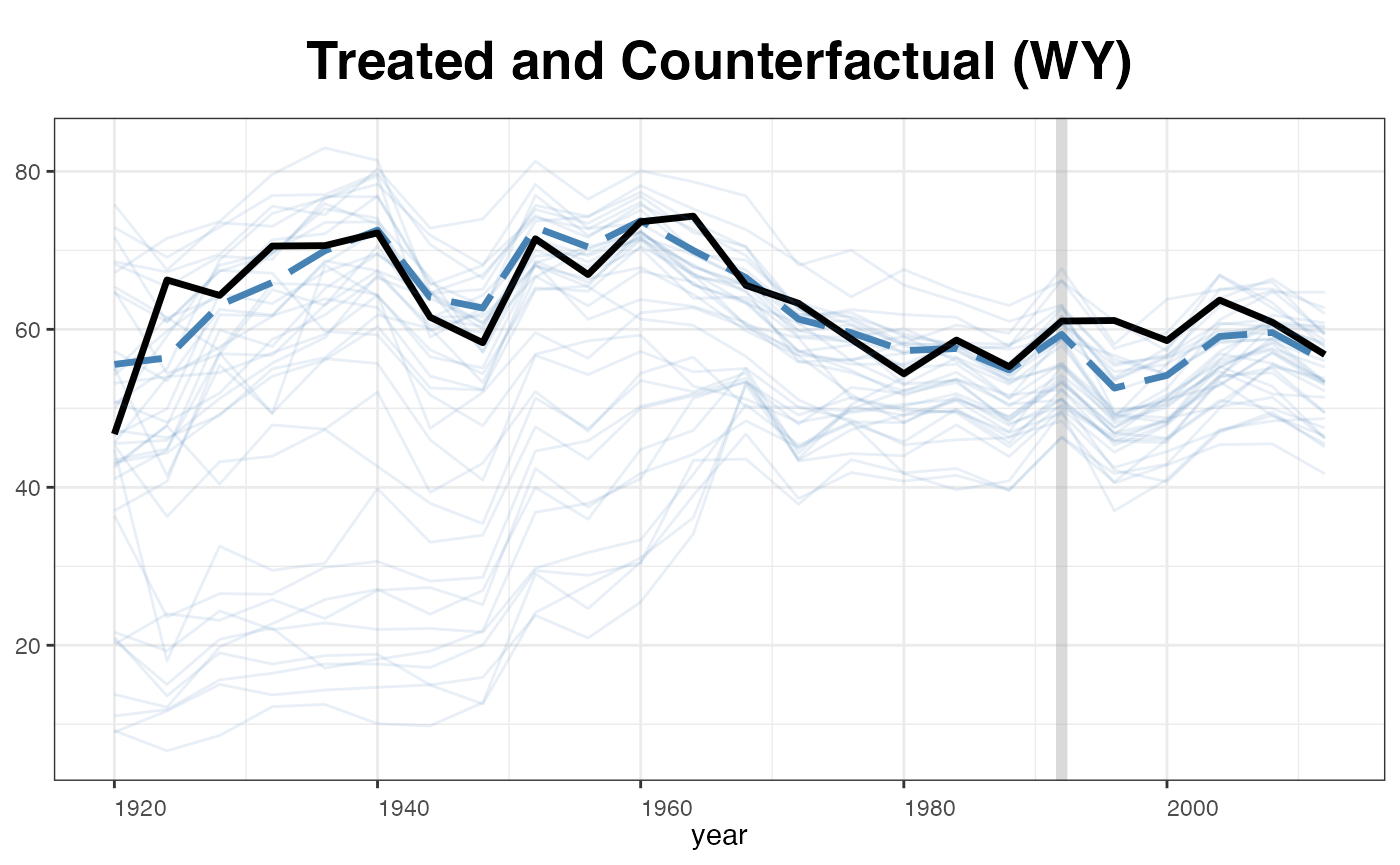

plot(out, type = "counterfactual", id = "WY",

raw = "all", legendOff = TRUE)

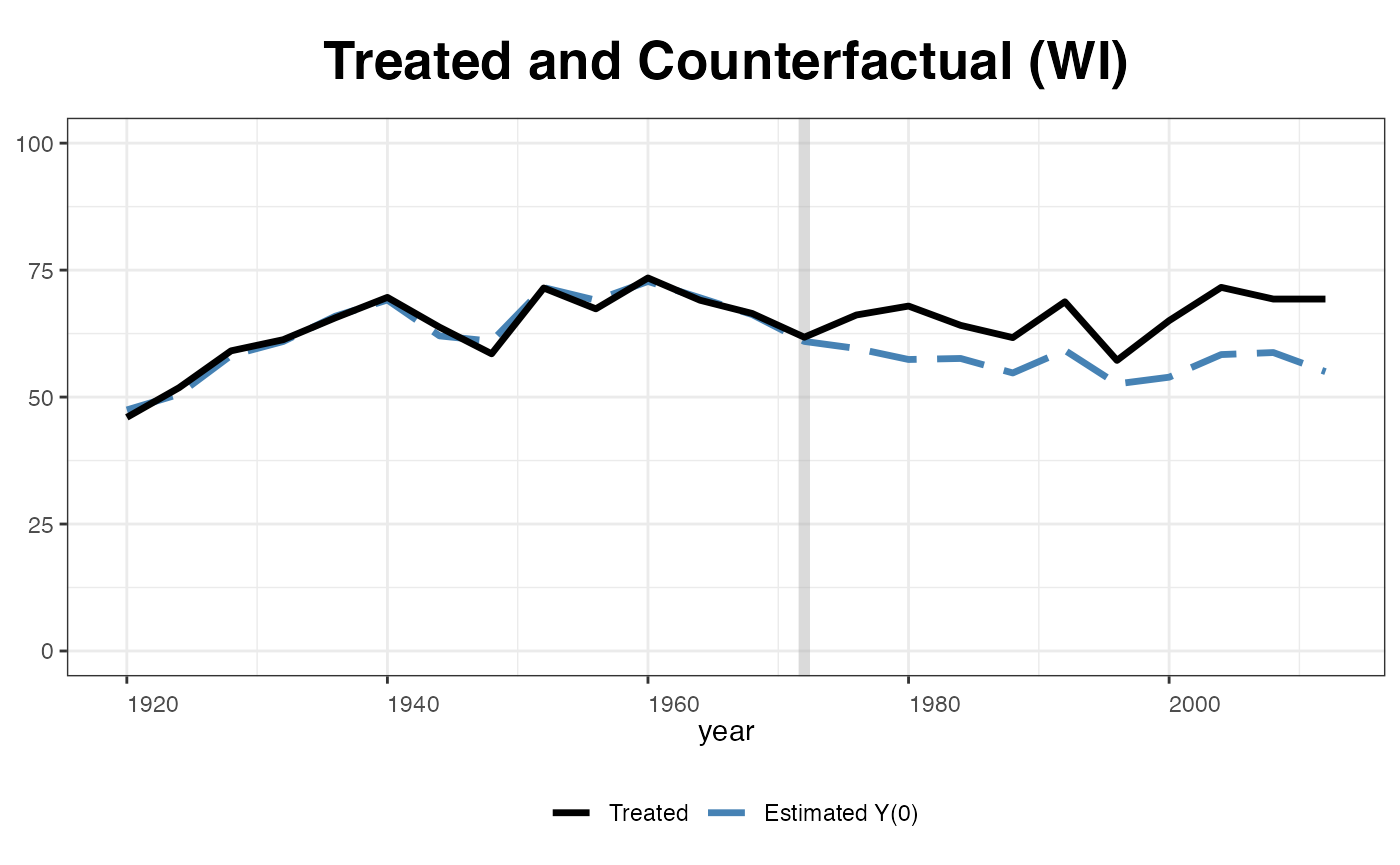

plot(out, type = "counterfactual", id = "WI",

raw = "none", shade.post = FALSE, ylim = c(0,100),

legend.labs = c("Wisconsin Actual","Wisconsin Counterfactural"))

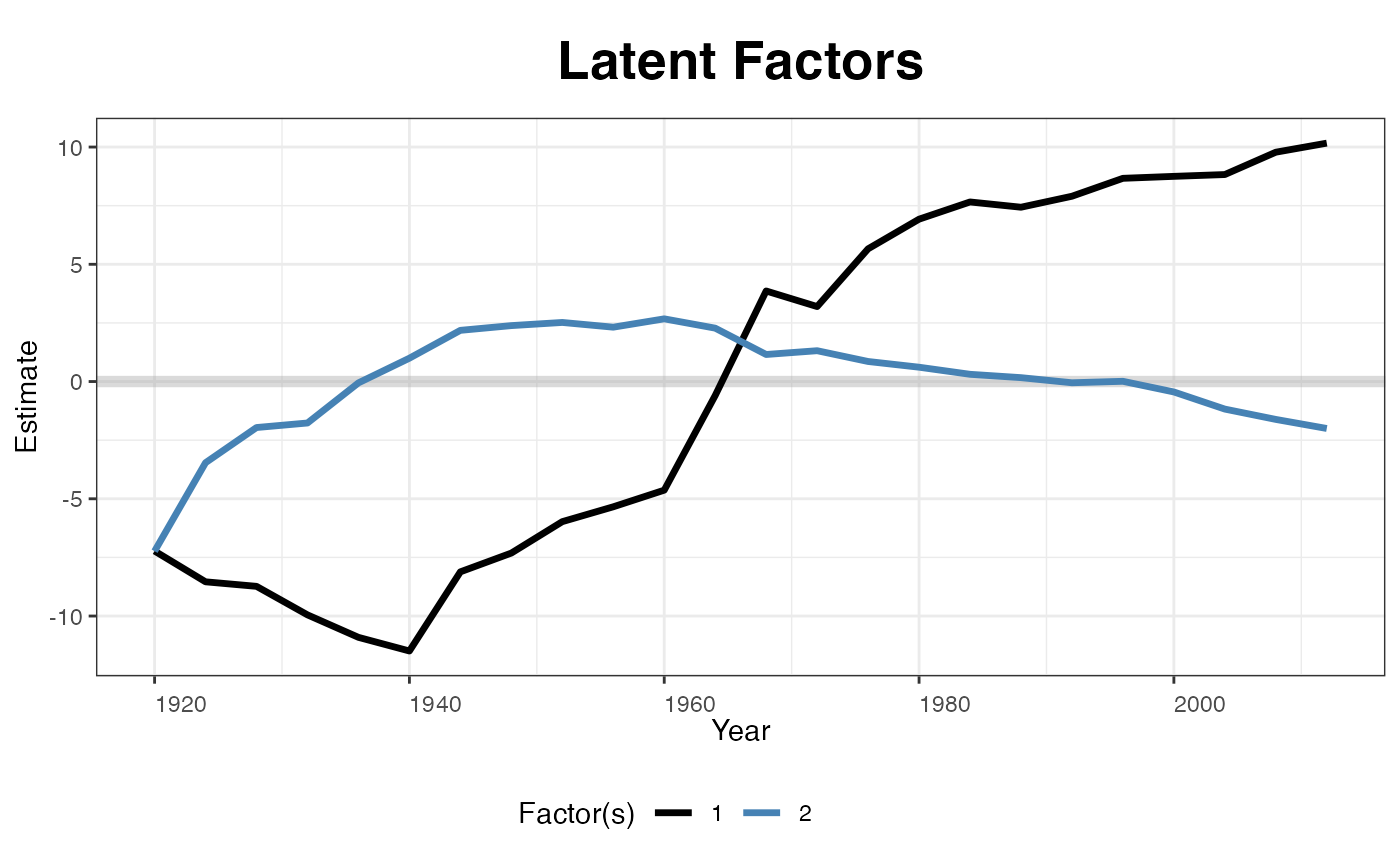

Estimated factors and factor loadings::

plot(out, type = "factors", xlab="Year")

plot(out, type = "loadings")

Unbalanced panels

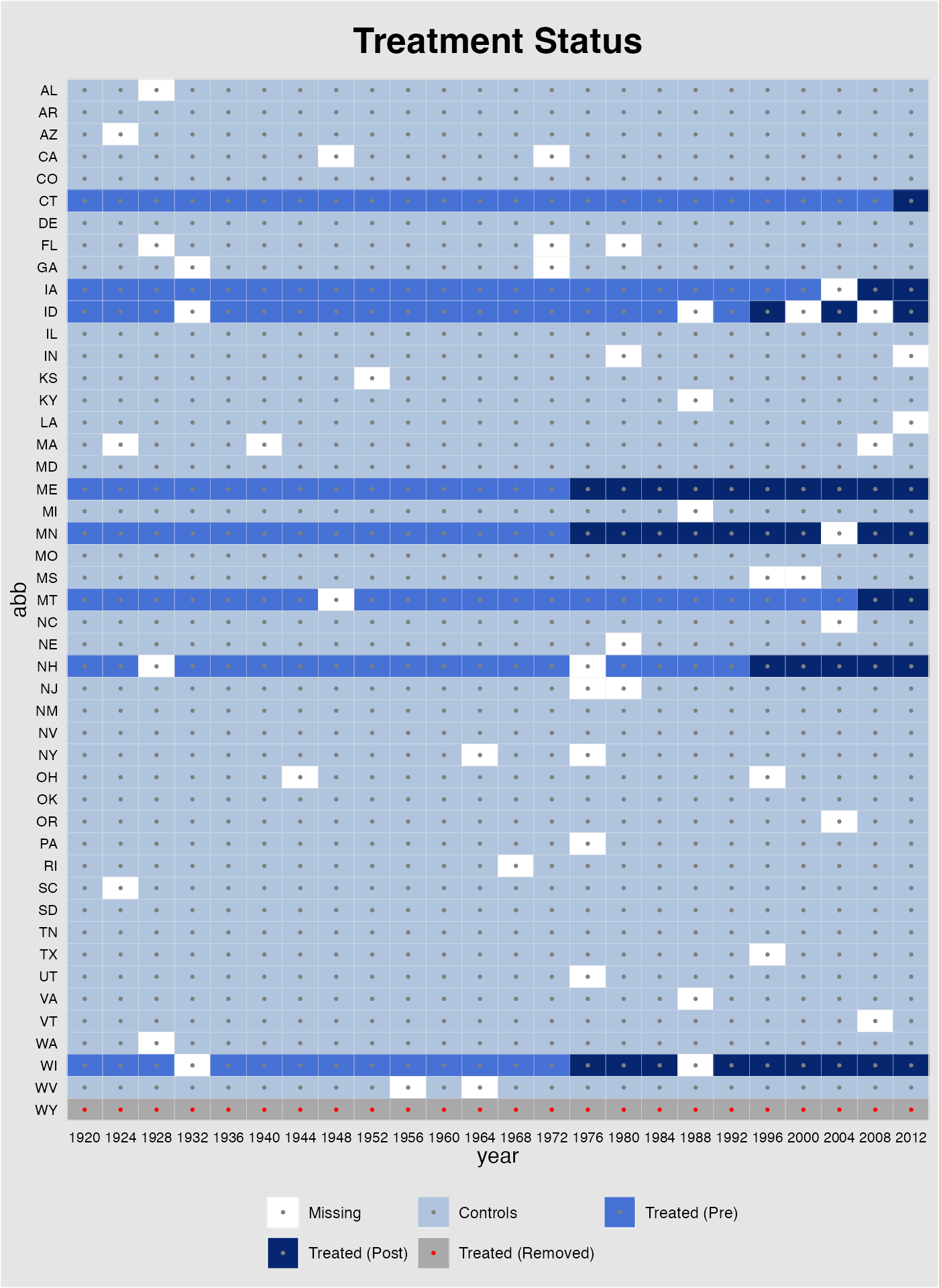

Starting from v1.0.7, gsynth can accommodate unbalanced panels. To illustrate how it works, we randomly remove 50 observations as well as the first 15 observations of Wyoming from the turnout dataset and then re-estimate the model:

set.seed(123456)

turnout.ub <- turnout[-c(which(turnout$abb=="WY")[1:15],

sample(1:nrow(turnout),50,replace=FALSE)),]Again, before running any regressions, we first plot the data structure and visualize missing values. In the following plot, white cells represent missing values.

panelview(turnout ~ policy_edr + policy_mail_in + policy_motor,

data = turnout.ub, index = c("abb","year"),

pre.post = TRUE)

Estimation

out <- gsynth(turnout ~ policy_edr + policy_mail_in + policy_motor,

data = turnout.ub, index = c("abb","year"),

se = TRUE, inference = "parametric",

r = c(0, 5), CV = TRUE, force = "two-way",

parallel = TRUE, min.T0 = 8,

nboots = 1000, seed = 02139)

#> Some treated units has too few pre-treatment periods.

#> They will be automatically removed.

#> Parallel computing ...

#> Cross-validating ...

#> r = 0; sigma2 = 78.53850; IC = 4.85229; PC = 72.87077; MSPE = 19.00413

#> r = 1; sigma2 = 13.71115; IC = 3.56457; PC = 21.68716; MSPE = 11.91054

#> r = 2; sigma2 = 8.64454; IC = 3.54545; PC = 19.34554; MSPE = 7.61167

#> r = 3; sigma2 = 6.52720; IC = 3.69114; PC = 18.90508; MSPE = 7.56166*

#> r = 4; sigma2 = 4.83105; IC = 3.80135; PC = 17.18456; MSPE = 15.96018

#> r = 5; sigma2 = 4.16136; IC = 4.04774; PC = 17.56161; MSPE = 15.64924

#>

#> r* = 3

#>

#>

Simulating errors ...

Bootstrapping ...

#>

list of removed units: WYPlot results

The missing plot visualizes the data being used in the regression (similar to that from turning on quick_missing in the main function). It is different from the plot generated from panelview in that observations for Wyoming are now represented by gray cells with red dots to indicate that they are removed from the sample because of insufficient number of pre-treatment periods. The missing data matrix can also be retrieved from out$obs.missing, in which 0, 1, 2, 3 4 represent missing values, control observations, treated (pre-treatment) observations, treated (post-treatment) observations, and treated observations removed from the sample, respectively.

plot(out, type = "missing")

Like in panelview, users can plot part of all units by specifying id and adjust labels on x-axis when the the magnitude of data is too large.

plot(out, type = "missing", xlab = "Year", ylab = "State",

main = "Treatment Status", id = out$id.tr,

xlim = c(1920,2012), axis.adjust=TRUE)

We re-produce the “gap” plot with the unbalanced panel:

Matrix completion

Finally, we re-estimate the model using the matrix completion method:

out.mc <- gsynth(turnout ~ policy_edr + policy_mail_in + policy_motor,

min.T0 = 8, data = turnout.ub,

index = c("abb","year"), estimator = "mc",

se = TRUE, nboots = 1000, seed = 02139)

#> Some treated units has too few pre-treatment periods.

#> They will be automatically removed.

#> Parallel computing ...

#> Cross-validating ...

#> lambda.norm = 1.00000; MSPE = 111.41839

#> lambda.norm = 0.42170; MSPE = 47.92701

#> lambda.norm = 0.17783; MSPE = 22.54666

#> lambda.norm = 0.07499; MSPE = 14.65412*

#> lambda.norm = 0.03162; MSPE = 15.40772

#> lambda.norm = 0.01334; MSPE = 23.22015

#> lambda.norm = 0.00562; MSPE = 53.92629

#> lambda.norm = 0.00237; MSPE = 107.19545

#> lambda.norm = 0.00100; MSPE = 109.61991

#> lambda.norm = 0.00000; MSPE = 111.41839

#>

#> lambda.norm* = 0.07498942

#>

#>

Bootstrapping ...

#>

list of removed units: WY

Additional Notes

Running gsynth with unbalanced panels will take significantly more time than with balanced panels (the magnitude is often 100:1 or even higher). This is because the EM algorithm that fills in missing values (also written in C++) runs many more loops. This means users can often significantly reduce run-time by removing some units or time periods that have many missing values. Knowing the data structure before running any regressions always helps!

Similarly, adding covariates will significantly slow down the algorithm because the IFE/MC model will take more time to converge.

Set

min.T0to a positive number helps. The algorithm will automatically discard treated units that have too few pre-treatment periods. A bigger \(T_{0}\) reduces bias in the causal estimates and the chances of severe extrapolation. If users run cross-validation to select the number of factors,min.T0needs to be equal to or greater than (r.max+2).